aws.osml.photogrammetry

Many users need to estimate the geographic position of an object found in a georeferenced image. The osml-imagery-toolkit provides open source implementations of the image-to-world and world-to-image equations for some common replacement sensor models. These sensor models work with many georeferenced imagery types and do not require orthorectification of the image. The current implementation supports:

Rational Polynomials: Models based on rational polynomials specified using RSM and RPC metadata found in NITF TREs.

SAR Sensor Independent Models: Models as defined by the SICD and SIDD standards with metadata found in the NITF XML data segment.

Perspective and Affine Projections: Simple matrix-based projections that can be computed from the geolocations of the four image corners or from tags found in GeoTIFF images.

Note that the current implementation does not support the RSM Grid based sensor models or the adjustable parameter options. These features will be added in a future release.

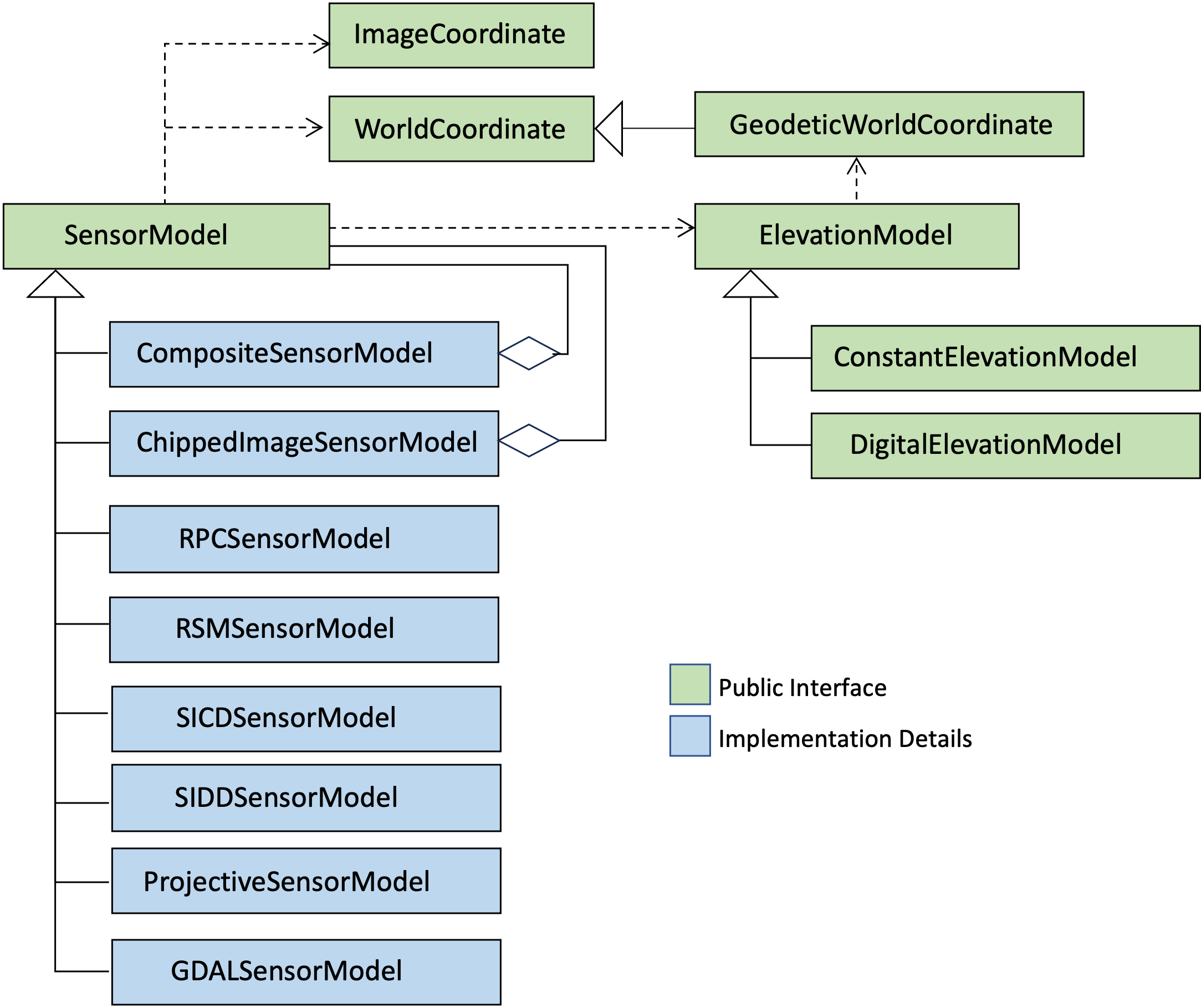

Class diagram of the aws.osml.photogrammetry package showing public and private classes.

Geolocating Image Pixels: Basic Examples

Applications do not typically interact with a specific sensor model implementation directly. Instead, they let the SensorModel abstraction encapsulate the details and rely on the image IO utilities to construct the appropriate type given the available metadata.

```python
from math import radians

from aws.osml.gdal import load_gdal_dataset
from aws.osml.photogrammetry import GeodeticWorldCoordinate

dataset, sensor_model = load_gdal_dataset("./imagery/sample.nitf")

lon_degrees = -77.404453
lat_degrees = 38.954831
meters_above_ellipsoid = 100.0

# Note the GeodeticWorldCoordinate is (longitude, latitude, elevation) with longitude and latitude in **radians**
# and elevation in meters above the WGS84 ellipsoid. The resulting ImageCoordinate is returned in (x, y) pixels.
image_location = sensor_model.world_to_image(
    GeodeticWorldCoordinate([radians(lon_degrees),
                             radians(lat_degrees),
                             meters_above_ellipsoid]))
```

```python
from aws.osml.gdal import load_gdal_dataset
from aws.osml.photogrammetry import ImageCoordinate

dataset, sensor_model = load_gdal_dataset("./imagery/sample.nitf")
width = dataset.RasterXSize
height = dataset.RasterYSize

# Pixel (x, y) locations of the four image corners
image_corners = [[0, 0], [width, 0], [width, height], [0, height]]
geo_image_corners = [sensor_model.image_to_world(ImageCoordinate(corner))
                     for corner in image_corners]

# GeodeticWorldCoordinates have custom formatting defined that supports a variety of common output formats.
# The example shown below will produce a ddmmssXdddmmssY formatted coordinate (e.g. 295737N0314003E)
for geodetic_corner in geo_image_corners:
    print(f"{geodetic_corner:%ld%lm%ls%lH%od%om%os%oH}")
```

Geolocating Image Pixels: Addition of an External Elevation Model

The image-to-world calculation can optionally use an external digital elevation model (DEM) when geolocating points on an image. How the elevation model is used varies by sensor model, but examples include:

Using DEM elevations as a constraint during iterations of a rational polynomial camera’s image-to-world calculation.

Computing the intersection of an R/Rdot contour with a DEM for sensor independent SAR models.

All of these approaches make the fundamental assumption that the pixel lies on the terrain surface. If a DEM is not available, we assume a constant surface whose elevation is provided in the image metadata.

```python
from aws.osml.gdal import GDALDigitalElevationModelTileFactory, load_gdal_dataset
from aws.osml.photogrammetry import DigitalElevationModel, GenericDEMTileSet, ImageCoordinate

ds, sensor_model = load_gdal_dataset("./imagery/sample.nitf")

# This sets up an external elevation model assuming terrain data is named something like:
# ./SRTM/dted/w044/s23.dt2
elevation_model = DigitalElevationModel(
    GenericDEMTileSet(format_spec="dted/%oh%od/%lh%ld.dt2"),
    GDALDigitalElevationModelTileFactory("./SRTM"))

# Note the order of ImageCoordinate is (x, y) and the resulting geodetic coordinate is
# (longitude, latitude, elevation) with longitude and latitude in **radians** and elevation in meters.
geodetic_location_of_image_center = sensor_model.image_to_world(
    ImageCoordinate([ds.RasterXSize / 2, ds.RasterYSize / 2]),
    elevation_model=elevation_model)
```

External References

Manual of Photogrammetry: https://www.amazon.com/Manual-Photogrammetry-PhD-Chris-McGlone/dp/1570830991

NITF Compendium of Controlled Support Data Extensions: https://nsgreg.nga.mil/doc/view?i=5417

The Replacement Sensor Model (RSM): Overview, Status, and Performance Summary: https://citeseerx.ist.psu.edu/doc_view/pid/c25de8176fe790c28cf6e1aff98ecdea8c726c96

RPC Whitepaper: https://users.cecs.anu.edu.au/~hartley/Papers/cubic/cubic.pdf

SICD Volume 3, Image Projections Description Document: https://nsgreg.nga.mil/doc/view?i=5383

WGS84 Standard: https://nsgreg.nga.mil/doc/view?i=4085

APIs

- class aws.osml.photogrammetry.ChippedImageSensorModel(original_image_coordinates: List[ImageCoordinate], chipped_image_coordinates: List[ImageCoordinate], full_image_sensor_model: SensorModel)

Bases:

SensorModel

This sensor model should be used when we have pixels for only a portion of the image but have a sensor model that describes the full image. In this case the image coordinates must be converted to those of the full image before being used by the full sensor model.

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function returns the longitude, latitude, elevation world coordinate associated with the x, y coordinate of any pixel in the image.

- Parameters:

image_coordinate – the x, y image coordinate

elevation_model – an elevation model used to transform the coordinate

options – a dictionary of options that will be passed on to the full image sensor model

- Returns:

the longitude, latitude, elevation world coordinate

- world_to_image(world_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function returns the x, y image coordinate associated with a given longitude, latitude, elevation world coordinate.

- Parameters:

world_coordinate – the longitude, latitude, elevation world coordinate

- Returns:

the x, y image coordinate
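The chip's pixels are a subset of the full image, so the coordinate conversion can be very simple. The sketch below assumes the chip is an axis-aligned crop that may have been resampled by a constant factor along each axis. Under that assumption, two opposite corners define the mapping. The helper names are hypothetical, and the library may fit a more general transform from the coordinate lists it receives.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def make_chip_to_full(original: List[Point], chipped: List[Point]):
    """Return a function mapping chip (x, y) to full-image (x, y).

    Assumes an axis-aligned crop with per-axis scaling, so the corners at
    index 0 and index 2 (opposite corners) define the mapping.
    """
    (ox0, oy0), (ox2, oy2) = original[0], original[2]
    (cx0, cy0), (cx2, cy2) = chipped[0], chipped[2]
    # Full-image pixels per chip pixel along each axis
    sx = (ox2 - ox0) / (cx2 - cx0)
    sy = (oy2 - oy0) / (cy2 - cy0)

    def chip_to_full(x: float, y: float) -> Point:
        return (ox0 + (x - cx0) * sx, oy0 + (y - cy0) * sy)

    return chip_to_full


# A 512x512 chip cut from the full image starting at pixel (1000, 2000)
original = [(1000, 2000), (1512, 2000), (1512, 2512), (1000, 2512)]
chipped = [(0, 0), (512, 0), (512, 512), (0, 512)]
chip_to_full = make_chip_to_full(original, chipped)
print(chip_to_full(10.5, 20.5))  # (1010.5, 2020.5)
```

The corner order matches the [[0, 0], [width, 0], [width, height], [0, height]] convention used in the examples near the top of this page.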

- class aws.osml.photogrammetry.CompositeSensorModel(approximate_sensor_model: SensorModel, precision_sensor_model: SensorModel)

Bases:

SensorModel

A CompositeSensorModel is a SensorModel that combines an approximate but fast model with an accurate but slower model.

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function first calls the approximate model’s image_to_world function to get an initial guess and then passes that information to the more accurate model through the options parameter’s ‘initial_guess’ and ‘initial_search_distance’ options.

- Parameters:

image_coordinate – the x, y image coordinate

elevation_model – an optional elevation model used to transform the coordinate

options – the options that will be augmented and then passed along

- Returns:

the longitude, latitude, elevation world coordinate
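The hand-off between the two models can be sketched with plain callables. Only the option names initial_guess and initial_search_distance come from the description above. The function signature, the toy models, and the default search distance of 0.01 are hypothetical.

```python
from typing import Any, Callable, Dict, Optional, Tuple

Pixel = Tuple[float, float]
LonLat = Tuple[float, float]
Model = Callable[[Pixel, Dict[str, Any]], LonLat]


def composite_image_to_world(xy: Pixel, approximate: Model, precise: Model,
                             options: Optional[Dict[str, Any]] = None,
                             search_distance: float = 0.01) -> LonLat:
    """Seed a slow, accurate solver with the answer from a fast approximation."""
    # Copy the caller's options so they are not modified in place
    augmented = dict(options or {})
    augmented["initial_guess"] = approximate(xy, {})
    augmented["initial_search_distance"] = search_distance
    return precise(xy, augmented)


# Toy stand-ins: a linear approximation and a "precise" model that
# records the options it received and returns the initial guess.
received: Dict[str, Any] = {}


def approximate_model(xy: Pixel, options: Dict[str, Any]) -> LonLat:
    return (xy[0] * 0.5, xy[1] * 0.25)


def precise_model(xy: Pixel, options: Dict[str, Any]) -> LonLat:
    received.update(options)
    return options["initial_guess"]


print(composite_image_to_world((2.0, 4.0), approximate_model, precise_model))  # (1.0, 1.0)
```

Copying the options dictionary keeps any hints supplied by the caller while avoiding side effects on the caller's own dictionary.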

- world_to_image(world_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This is a pass-through to the more accurate sensor model’s world_to_image. These calculations tend to be quick, so there is no need to involve the approximate model.

- Parameters:

world_coordinate – the longitude, latitude, elevation world coordinate

- Returns:

the x, y image coordinate

- class aws.osml.photogrammetry.ConstantElevationModel(constant_elevation: float)

Bases:

ElevationModel

A constant elevation model with a single value for all longitude, latitude locations.

- set_elevation(world_coordinate: GeodeticWorldCoordinate) → None

Updates the world coordinate’s elevation to match the constant elevation.

- Parameters:

world_coordinate – the coordinate to update

- Returns:

None

- describe_region(world_coordinate: GeodeticWorldCoordinate) → ElevationRegionSummary | None

Get a summary of the region near the provided world coordinate.

- Parameters:

world_coordinate – the coordinate at the center of the region of interest

- Returns:

[min elevation value, max elevation value, no elevation data value, post spacing]

- class aws.osml.photogrammetry.DigitalElevationModel(tile_set: DigitalElevationModelTileSet, tile_factory: DigitalElevationModelTileFactory, raster_cache_size: int = 10)

Bases:

ElevationModel

A Digital Elevation Model (DEM) is a representation of the topographic surface of the Earth. In theory these representations exclude trees, buildings, and other surface objects, but in practice elevations from those objects are likely captured by the sensors used to collect the elevation data.

These datasets are normally stored as a pre-tiled collection of images with a well established resolution and coverage.

- set_elevation(geodetic_world_coordinate: GeodeticWorldCoordinate) → None

This method updates the elevation component of a geodetic world coordinate to match the surface elevation at the provided latitude and longitude. Note that if the DEM does not have elevation values for this region, or if there is an error loading the associated image, the elevation will be unchanged.

- Parameters:

geodetic_world_coordinate – the coordinate to update

- Returns:

None
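The get_interpolation_grid signature shows that the library interpolates tiles with SciPy's RectBivariateSpline. The sketch below swaps in plain bilinear interpolation to show the same idea, including the "leave the elevation unchanged" fallback. It assumes the coordinate has already been converted to fractional (row, col) grid positions, which is the job of the tile's sensor model. The function name is hypothetical.

```python
import math
from typing import List, Optional


def bilinear_elevation(grid: List[List[float]], row: float, col: float) -> Optional[float]:
    """Interpolate a DEM grid at fractional (row, col) grid coordinates.

    Returns None when the point is outside the grid (including the last
    row/column, which has no neighbor to interpolate toward) or touches a
    NaN post, so the caller can leave the elevation unchanged.
    """
    r0, c0 = int(math.floor(row)), int(math.floor(col))
    if r0 < 0 or c0 < 0 or r0 + 1 >= len(grid) or c0 + 1 >= len(grid[0]):
        return None
    dr, dc = row - r0, col - c0
    corners = (grid[r0][c0], grid[r0][c0 + 1], grid[r0 + 1][c0], grid[r0 + 1][c0 + 1])
    if any(math.isnan(v) for v in corners):
        return None
    # Interpolate along columns first, then blend the two rows
    top = corners[0] * (1 - dc) + corners[1] * dc
    bottom = corners[2] * (1 - dc) + corners[3] * dc
    return top * (1 - dr) + bottom * dr


elevation = 100.0  # current elevation of the world coordinate, in meters
value = bilinear_elevation([[0.0, 10.0], [20.0, 30.0]], 0.5, 0.5)
if value is not None:
    elevation = value  # 15.0
```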

- describe_region(geodetic_world_coordinate: GeodeticWorldCoordinate) → ElevationRegionSummary | None

Get a summary of the region near the provided world coordinate.

- Parameters:

geodetic_world_coordinate – the coordinate at the center of the region of interest

- Returns:

a summary of the elevation data in this tile

- get_interpolation_grid(tile_path: str) → Tuple[RectBivariateSpline | None, SensorModel | None, ElevationRegionSummary | None]

This method loads and converts an array of elevation values into a class that can interpolate values that lie between measured elevations. The sensor model is also returned to allow us to precisely identify the location in the grid of a world coordinate.

Note that the results of this method are cached by tile_id. It is very common for the set_elevation() method to be called multiple times for locations that are in a narrow region of interest. This will prevent unnecessary repeated loading of tiles.

- Parameters:

tile_path – the location of the tile to load

- Returns:

the cached interpolation object, sensor model, and summary
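The caching behavior described above resembles a bounded least-recently-used cache keyed on the tile path, with raster_cache_size as the bound. The sketch below uses functools.lru_cache. That choice is an assumption about the mechanism, not the library's code, and the tile paths and load counter are illustrative.

```python
from functools import lru_cache

load_count = 0


@lru_cache(maxsize=10)  # mirrors the raster_cache_size=10 default
def get_interpolation_grid(tile_path: str) -> tuple:
    """Hypothetical stand-in for an expensive tile load."""
    global load_count
    load_count += 1
    # A real implementation would read the raster and build the interpolator here
    return (tile_path, "grid")


# Nearby lookups hit the same tile, so only two loads actually happen
for path in ["w078/n38.dt2", "w078/n38.dt2", "w077/n38.dt2", "w078/n38.dt2"]:
    get_interpolation_grid(path)

print(load_count)  # 2
```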

- class aws.osml.photogrammetry.DigitalElevationModelTileFactory

Bases:

ABC

This class defines an abstraction that is able to load a tile and convert it to a NumPy array of elevation data along with a SensorModel that can be used to identify the grid locations associated with a latitude, longitude.

- Returns:

None

- abstract get_tile(tile_path: str) → Tuple[Any | None, SensorModel | None, ElevationRegionSummary | None]

Retrieve a numpy array of elevation values and a sensor model.

TODO: Replace Any with numpy.typing.ArrayLike once we move to numpy >1.20

- Parameters:

tile_path – the location of the tile to load

- Returns:

an array of elevation values, a sensor model, and a summary

- class aws.osml.photogrammetry.DigitalElevationModelTileSet

Bases:

ABC

This class defines an abstraction that is capable of identifying which elevation tile in a DEM contains the elevations for a given world coordinate. A DEM with global coverage is commonly split into a collection of files with well understood coverage areas. Those files may follow a simple naming convention or be cataloged in an external spatial index. This class abstracts those details away from the DigitalElevationModel, making it easy to extend this design to various tile sets.

- Returns:

None

- abstract find_tile_id(geodetic_world_coordinate: GeodeticWorldCoordinate) → str | None

Converts the latitude, longitude of the world coordinate into a tile path.

- Parameters:

geodetic_world_coordinate – the world coordinate of interest

- Returns:

the tile path or None if the DEM does not have coverage for this location

- class aws.osml.photogrammetry.ElevationModel

Bases:

ABC

An elevation model associates a height z with a given x, y of a world coordinate. It typically provides information about the terrain associated with longitude, latitude locations of an ellipsoid, but it can also be used to model surfaces for other ground domains.

- abstract set_elevation(world_coordinate: GeodeticWorldCoordinate) → None

This method updates the elevation component of a world coordinate to match the surface elevation at longitude, latitude.

- Parameters:

world_coordinate – the coordinate to update

- Returns:

None

- abstract describe_region(world_coordinate: GeodeticWorldCoordinate) → ElevationRegionSummary | None

Get a summary of the region near the provided world coordinate.

- Parameters:

world_coordinate – the coordinate at the center of the region of interest

- Returns:

the summary information

- class aws.osml.photogrammetry.ElevationRegionSummary(min_elevation: float, max_elevation: float, no_data_value: int, post_spacing: float)

Bases:

object

This class contains a general summary of an elevation tile.

- class aws.osml.photogrammetry.GDALAffineSensorModel(geo_transform: List, proj_wkt: str | None = None)

Bases:

SensorModel

GDAL provides a simple affine transform used to convert XY pixel values to longitude, latitude. See https://gdal.org/tutorials/geotransforms_tut.html

transform[0]: x-coordinate of the upper-left corner of the upper-left pixel.

transform[1]: w-e pixel resolution / pixel width.

transform[2]: row rotation (typically zero).

transform[3]: y-coordinate of the upper-left corner of the upper-left pixel.

transform[4]: column rotation (typically zero).

transform[5]: n-s pixel resolution / pixel height (negative value for a north-up image).

The necessary transform matrix can be obtained from a dataset using the GetGeoTransform() operation.
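The six coefficients listed above define GDAL's standard affine mapping from a pixel location to georeferenced coordinates, as documented in the linked tutorial. A minimal sketch of the forward mapping and its inverse follows. The function names are illustrative. The sketch assumes the geotransform is already in longitude/latitude degrees and ignores the projection that GDALAffineSensorModel can take through proj_wkt.

```python
from typing import Sequence, Tuple


def pixel_to_geo(gt: Sequence[float], x: float, y: float) -> Tuple[float, float]:
    """Apply a GDAL geotransform to a continuous pixel location (x = column, y = row)."""
    return (gt[0] + x * gt[1] + y * gt[2],
            gt[3] + x * gt[4] + y * gt[5])


def geo_to_pixel(gt: Sequence[float], gx: float, gy: float) -> Tuple[float, float]:
    """Invert the 2x2 linear part of the geotransform to recover (x, y)."""
    det = gt[1] * gt[5] - gt[2] * gt[4]
    dx, dy = gx - gt[0], gy - gt[3]
    return ((gt[5] * dx - gt[2] * dy) / det,
            (-gt[4] * dx + gt[1] * dy) / det)


# North-up image with 0.25 degree pixels whose upper-left corner is at (-78.0, 39.0)
gt = (-78.0, 0.25, 0.0, 39.0, 0.0, -0.25)
print(pixel_to_geo(gt, 4, 2))  # (-77.0, 38.5)
print(geo_to_pixel(gt, -77.0, 38.5))  # (4.0, 2.0)
```

The inverse only needs the determinant of the 2x2 block. This is why the affine model's world_to_image is a closed-form calculation rather than an iterative search.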

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function returns the longitude, latitude, elevation world coordinate associated with the x, y coordinate of any pixel in the image. The GDAL Geo Transforms do not provide any information about elevation, so it will always be 0.0 unless the optional elevation model is provided.

- Parameters:

image_coordinate – the x, y image coordinate

elevation_model – an optional elevation model used to transform the coordinate

options – an optional dictionary of hints; this sensor model does not support any hints

- Returns:

the longitude, latitude, elevation world coordinate

- world_to_image(world_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function returns the x, y image coordinate associated with a given longitude, latitude, elevation world coordinate.

- Parameters:

world_coordinate – the longitude, latitude, elevation world coordinate

- Returns:

the x, y image coordinate

- class aws.osml.photogrammetry.GenericDEMTileSet(format_spec: str = '%od%oh/%ld%lh.dt2', min_latitude_degrees: float = -90.0, max_latitude_degrees: float = 90.0, min_longitude_degrees: float = -180.0, max_longitude_degrees: float = 180.0)

Bases:

DigitalElevationModelTileSet

A generalizable tile set with a naming convention that can be described as a format string.

- find_tile_id(geodetic_world_coordinate: GeodeticWorldCoordinate) → str | None

This method creates tile IDs based on the format string provided.

- Parameters:

geodetic_world_coordinate – the world coordinate of interest

- Returns:

the tile path or None if the DEM does not have coverage for this location
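A common DTED convention names each 1x1 degree tile after its southwest corner. This matches the ./SRTM/dted/w044/s23.dt2 example earlier on this page. The sketch below expands the hemisphere and degree directives under that assumption, padding latitude to two digits and longitude to three. It is a hypothetical illustration, not the library's find_tile_id, and it skips the coverage bounds check that can make the real method return None.

```python
import math
import re


def find_tile_id(format_spec: str, lon_radians: float, lat_radians: float) -> str:
    """Expand tile-naming directives for the 1x1 degree tile containing a point.

    Assumes tiles are named after their southwest corner (floor of the degrees),
    with latitude padded to 2 digits and longitude to 3.
    """
    lat = math.floor(math.degrees(lat_radians))
    lon = math.floor(math.degrees(lon_radians))
    values = {
        "%ld": f"{abs(lat):02d}",
        "%lh": "n" if lat >= 0 else "s",
        "%lH": "N" if lat >= 0 else "S",
        "%od": f"{abs(lon):03d}",
        "%oh": "e" if lon >= 0 else "w",
        "%oH": "E" if lon >= 0 else "W",
    }
    # Substitute each supported directive; all other text is copied literally
    return re.sub(r"%[lo][dhH]", lambda m: values[m.group(0)], format_spec)


tile = find_tile_id("dted/%oh%od/%lh%ld.dt2", math.radians(-43.2), math.radians(-22.9))
print(tile)  # dted/w044/s23.dt2
```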

- class aws.osml.photogrammetry.GeodeticWorldCoordinate(coordinate: ArrayLike | None = None)

Bases:

WorldCoordinate

A GeodeticWorldCoordinate is a WorldCoordinate where the x, y, z components can be interpreted as longitude, latitude, and elevation. It is important to note that longitude and latitude are in radians while elevation is in meters above the ellipsoid.

This class defines a custom format specification for geodetic coordinates that uses % directives similar to those of datetime. These directives can be combined as needed with literal values to produce a wide range of output formats. For example, ‘%ld%lm%ls%lH%od%om%os%oH’ will produce a ddmmssXdddmmssY formatted coordinate. The first half, ddmmssX, represents degrees, minutes, and seconds of latitude with X representing North or South (N for North, S for South). The second half, dddmmssY, represents degrees, minutes, and seconds of longitude with Y representing East or West (E for East, W for West).

| Directive | Meaning | Notes |
|---|---|---|
| %L | latitude in decimal radians | 1 |
| %l | latitude in decimal degrees | 1 |
| %ld | latitude degrees | 2 |
| %lm | latitude minutes | |
| %ls | latitude seconds | |
| %lh | latitude hemisphere (n or s) | |
| %lH | latitude hemisphere uppercase (N or S) | |
| %O | longitude in decimal radians | 1 |
| %o | longitude in decimal degrees | 1 |
| %od | longitude degrees | 2 |
| %om | longitude minutes | |
| %os | longitude seconds | |
| %oh | longitude hemisphere (e or w) | |
| %oH | longitude hemisphere uppercase (E or W) | |
| %E | elevation in meters | |
| %% | used to represent a literal % in the output | |

Notes:

1. Formatting in decimal degrees or radians produces signed values.

2. Formatting for degrees, minutes, and seconds is always unsigned because the hemisphere is expected to be included.

3. Any unknown directives are ignored.

- to_dms_string() → str

Outputs this coordinate in a format ddmmssXdddmmssY. The first half, ddmmssX, represents degrees, minutes, and seconds of latitude with X representing North or South (N for North, S for South). The second half, dddmmssY, represents degrees, minutes, and seconds of longitude with Y representing East or West (E for East, W for West), respectively.

- Returns:

the formatted coordinate string
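The ddmmssXdddmmssY layout can be reproduced with a few integer operations, with the hemisphere letter carrying the sign. The sketch below assumes seconds are rounded to the nearest whole second; the library may truncate or treat fractional seconds differently. The helper names are hypothetical.

```python
import math
from typing import Tuple


def _dms(value_degrees: float) -> Tuple[int, int, int]:
    """Split an angle into whole degrees, minutes, and seconds (unsigned, rounded)."""
    total_seconds = round(abs(value_degrees) * 3600)
    return total_seconds // 3600, (total_seconds % 3600) // 60, total_seconds % 60


def to_dms_string(lon_radians: float, lat_radians: float) -> str:
    """Format a (longitude, latitude) pair in radians as ddmmssXdddmmssY."""
    lat_d, lat_m, lat_s = _dms(math.degrees(lat_radians))
    lon_d, lon_m, lon_s = _dms(math.degrees(lon_radians))
    lat_h = "N" if lat_radians >= 0 else "S"
    lon_h = "E" if lon_radians >= 0 else "W"
    return f"{lat_d:02d}{lat_m:02d}{lat_s:02d}{lat_h}{lon_d:03d}{lon_m:02d}{lon_s:02d}{lon_h}"


print(to_dms_string(math.radians(31.6675), math.radians(29.960278)))  # 295737N0314003E
```

Rounding the total number of seconds before splitting it avoids producing an invalid "60" seconds field.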

- class aws.osml.photogrammetry.INCAProjectionSet(r_ca_scp: float, inca_time_coa_poly: Polynomial, drate_sf_poly: Polynomial2D, coa_time_poly: Polynomial2D, arp_poly: PolynomialXYZ, delta_arp: ndarray = array([0., 0., 0.]), delta_varp: ndarray = array([0., 0., 0.]), range_bias: float = 0.0)

Bases:

COAProjectionSet

- class aws.osml.photogrammetry.ImageCoordinate(coordinate: ArrayLike | None = None)

Bases:

object

This image coordinate system convention is defined as follows. The upper left corner of the upper left pixel of the original full image has continuous image coordinates (pixel position) (r, c) = (0.0, 0.0), and the center of the upper left pixel has continuous image coordinates (r, c) = (0.5, 0.5). The first row of the original full image has discrete image row coordinate R = 0 and corresponds to a range of continuous image row coordinates of r = [0, 1). The first column of the original full image has discrete image column coordinate C = 0 and corresponds to a range of continuous image column coordinates of c = [0, 1). Thus, for example, continuous image coordinates (r, c) = (5.6, 8.3) correspond to the sixth row and ninth column of the original full image, which has discrete image coordinates (R, C) = (5, 8).
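Under the convention above, the discrete pixel that contains a continuous location is found with a floor operation. A pixel's center sits half a pixel from its upper-left corner. A sketch with hypothetical helper names, using the (r, c) order from the description:

```python
import math
from typing import Tuple


def continuous_to_discrete(r: float, c: float) -> Tuple[int, int]:
    """Map a continuous (r, c) location to the (R, C) pixel that contains it."""
    return math.floor(r), math.floor(c)


def pixel_center(R: int, C: int) -> Tuple[float, float]:
    """Continuous coordinates of the center of discrete pixel (R, C)."""
    return R + 0.5, C + 0.5


print(continuous_to_discrete(5.6, 8.3))  # (5, 8)
print(pixel_center(0, 0))  # (0.5, 0.5)
```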

- class aws.osml.photogrammetry.PFAProjectionSet(scp_ecf: WorldCoordinate, polar_ang_poly, spatial_freq_sf_poly, coa_time_poly: Polynomial2D, arp_poly: PolynomialXYZ, delta_arp: ndarray = array([0., 0., 0.]), delta_varp: ndarray = array([0., 0., 0.]), range_bias: float = 0.0)

Bases:

COAProjectionSet

This Center Of Aperture (COA) projection set is to be used with a range azimuth image grid (RGAZIM) and polar formatted (PFA) phase history data. See section 4.1 of the SICD Specification Volume 3.

- class aws.osml.photogrammetry.PlaneProjectionSet(scp_ecf: WorldCoordinate, image_plane_urow: ndarray, image_plane_ucol: ndarray, coa_time_poly: Polynomial2D, arp_poly: PolynomialXYZ, delta_arp: ndarray = array([0., 0., 0.]), delta_varp: ndarray = array([0., 0., 0.]), range_bias: float = 0.0)

Bases:

COAProjectionSet

- class aws.osml.photogrammetry.Polynomial2D(coef: ArrayLike)

Bases:

object

This class contains coefficients for a two-dimensional polynomial.

- class aws.osml.photogrammetry.PolynomialXYZ(x_polynomial: Polynomial, y_polynomial: Polynomial, z_polynomial: Polynomial)

Bases:

object

This class is an aggregation of three one-dimensional polynomials that share the same input variable. Evaluating this class on the input variable yields an [x, y, z] vector.
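Both polynomial containers reduce to simple sums. The sketch below makes two assumptions. First, Polynomial2D coefficients are indexed as coef[i][j] multiplying x**i * y**j, which is the convention numpy.polynomial.polynomial.polyval2d uses. Second, each 1-D polynomial stores its coefficients in increasing power. The helper names and the straight-line trajectory are illustrative; the trajectory stands in for a position polynomial such as arp_poly evaluated at a time t.

```python
from typing import List, Sequence


def polyval(coef: Sequence[float], t: float) -> float:
    """Evaluate sum(coef[i] * t**i) with Horner's method."""
    result = 0.0
    for c in reversed(coef):
        result = result * t + c
    return result


def polyval2d(coef: Sequence[Sequence[float]], x: float, y: float) -> float:
    """Evaluate sum(coef[i][j] * x**i * y**j)."""
    return sum(c * x**i * y**j
               for i, row in enumerate(coef)
               for j, c in enumerate(row))


def polyval_xyz(x_coef: Sequence[float], y_coef: Sequence[float],
                z_coef: Sequence[float], t: float) -> List[float]:
    """Evaluate three 1-D polynomials at the same t, giving an [x, y, z] vector."""
    return [polyval(x_coef, t), polyval(y_coef, t), polyval(z_coef, t)]


# A straight-line trajectory: position = p0 + v * t
print(polyval_xyz([1000.0, 7.0], [2000.0, -1.5], [500.0, 0.0], 2.0))  # [1014.0, 1997.0, 500.0]
print(polyval2d([[1.0, 2.0], [3.0, 4.0]], 2.0, 3.0))  # 1 + 2*3 + 3*2 + 4*2*3 = 37.0
```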

- class aws.osml.photogrammetry.ProjectiveSensorModel(world_coordinates: List[GeodeticWorldCoordinate], image_coordinates: List[ImageCoordinate])

Bases:

SensorModel

This sensor model is used when we have a set of 2D tie point correspondences (longitude, latitude) -> (x, y) for an image.

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function returns the longitude, latitude, elevation world coordinate associated with the x, y coordinate of any pixel in the image.

- Parameters:

image_coordinate – the x, y image coordinate

elevation_model – optional elevation model used to transform the coordinate

options – optional dictionary of hints; this camera does not support any hints

- Returns:

the longitude, latitude, elevation world coordinate

- world_to_image(world_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function returns the x, y image coordinate associated with a given longitude, latitude, elevation world coordinate.

- Parameters:

world_coordinate – the longitude, latitude, elevation world coordinate

- Returns:

the x, y image coordinate
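Four (longitude, latitude) to (x, y) tie points are exactly enough to determine a plane-to-plane projective transform (a homography) with eight unknowns. The sketch below sets up the standard direct linear transform equations and solves them with a small Gaussian elimination, so it needs no third-party packages. The source does not state whether ProjectiveSensorModel uses this exact formulation or how it handles more than four points, so treat it as illustrative. In practice the world coordinates should be shifted and scaled before fitting to keep the system well conditioned. The example uses already-normalized coordinates for that reason.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small dense linear system with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][k] * x[k] for k in range(i + 1, n))) / m[i][i]
    return x


def fit_homography(world: Sequence[Point], image: Sequence[Point]) -> List[List[float]]:
    """Fit H (with H[2][2] = 1) so that image ~ H * world from exactly four tie points."""
    a: List[List[float]] = []
    b: List[float] = []
    for (X, Y), (x, y) in zip(world, image):
        a.append([X, Y, 1.0, 0.0, 0.0, 0.0, -X * x, -Y * x])
        b.append(x)
        a.append([0.0, 0.0, 0.0, X, Y, 1.0, -X * y, -Y * y])
        b.append(y)
    h = _solve(a, b) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]


def apply_homography(h: List[List[float]], X: float, Y: float) -> Point:
    """Map a world (lon, lat) point to an image (x, y) point."""
    w = h[2][0] * X + h[2][1] * Y + h[2][2]
    return ((h[0][0] * X + h[0][1] * Y + h[0][2]) / w,
            (h[1][0] * X + h[1][1] * Y + h[1][2]) / w)


# Normalized world corners (NW, NE, SE, SW) seen obliquely as a trapezoid in the image
world = [(0.0, 1.0), (1.0, 1.0), (1.0, 0.0), (0.0, 0.0)]
image = [(0.0, 0.0), (1000.0, 0.0), (900.0, 700.0), (100.0, 700.0)]
H = fit_homography(world, image)
# Each world corner now maps back to its image corner, up to rounding
```

An image_to_world direction can be obtained by inverting the 3x3 matrix, since a homography is invertible whenever the tie points are in general position.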

- class aws.osml.photogrammetry.RGAZCOMPProjectionSet(scp_ecf: WorldCoordinate, az_scale_factor: float, coa_time_poly: Polynomial2D, arp_poly: PolynomialXYZ, delta_arp: ndarray = array([0., 0., 0.]), delta_varp: ndarray = array([0., 0., 0.]), range_bias: float = 0.0)

Bases:

COAProjectionSet

- class aws.osml.photogrammetry.RPCPolynomial(coefficients: List[float])

Bases:

object

- evaluate(normalized_world_coordinate: WorldCoordinate) → float

This function evaluates the polynomial for the given world coordinate by summing up the result of applying each coefficient to the world coordinate components. Note that these polynomials are usually defined with the assumption that the world coordinate has been normalized.

- Parameters:

normalized_world_coordinate – the world coordinate

- Returns:

the resulting value
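RPC polynomials commonly have 20 cubic terms in a fixed order. The sketch below assumes the term ordering of the NITF RPC00B TRE, where L is normalized longitude, P is normalized latitude, and H is normalized height. This page does not say which ordering RPCPolynomial uses, so treat the ordering as an assumption.

```python
from typing import List, Sequence


def rpc00b_terms(L: float, P: float, H: float) -> List[float]:
    """The 20 monomials in RPC00B order (L = longitude, P = latitude, H = height, all normalized)."""
    return [1.0, L, P, H, L * P, L * H, P * H, L * L, P * P, H * H,
            P * L * H, L ** 3, L * P * P, L * H * H, L * L * P,
            P ** 3, P * H * H, L * L * H, P * P * H, H ** 3]


def evaluate_rpc_polynomial(coefficients: Sequence[float], L: float, P: float, H: float) -> float:
    """Sum each of the 20 coefficients multiplied by its matching monomial."""
    if len(coefficients) != 20:
        raise ValueError("RPC00B polynomials have exactly 20 coefficients")
    return sum(c * t for c, t in zip(coefficients, rpc00b_terms(L, P, H)))


# Only the L**3 term (index 11) is non-zero, so the result is 2**3
coefficients = [0.0] * 20
coefficients[11] = 1.0
print(evaluate_rpc_polynomial(coefficients, 2.0, 0.5, 0.25))  # 8.0
```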

- class aws.osml.photogrammetry.RPCSensorModel(err_bias: float, err_rand: float, line_off: float, samp_off: float, lat_off: float, long_off: float, height_off: float, line_scale: float, samp_scale: float, lat_scale: float, long_scale: float, height_scale: float, line_num_poly: RPCPolynomial, line_den_poly: RPCPolynomial, samp_num_poly: RPCPolynomial, samp_den_poly: RPCPolynomial)

Bases:

SensorModel

A Rational Polynomial Camera (RPC) sensor model is one where the world-to-image transform is approximated using a ratio of polynomials. The polynomials capture specific relationships between the latitude, longitude, and elevation and the image pixels.

These cameras were common approximations for many years but started to be phased out in 2014 in favor of the more general Replacement Sensor Model (RSM). It is not uncommon to find historical imagery or imagery from older sensors still operating today that contain the metadata for these sensor models.

- world_to_image(geodetic_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function transforms a geodetic world coordinate (longitude, latitude, elevation) into an image coordinate (x, y).

- Parameters:

geodetic_coordinate – the world coordinate (longitude, latitude, elevation)

- Returns:

the resulting image coordinate (x,y)
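The forward transform normalizes the ground coordinate, evaluates two ratios of polynomials, and denormalizes the results into pixel units. In the sketch below, the dictionary keys reuse the RPCSensorModel constructor parameter names, and the polynomials are passed in as plain callables. Offsets are assumed to be in degrees as stored in the RPC metadata. The function itself is hypothetical.

```python
from typing import Callable, Dict, Tuple

Poly = Callable[[float, float, float], float]


def rpc_world_to_image(lon_deg: float, lat_deg: float, height_m: float,
                       p: Dict[str, float],
                       line_num: Poly, line_den: Poly,
                       samp_num: Poly, samp_den: Poly) -> Tuple[float, float]:
    """Project a ground point to (x = sample, y = line) with an RPC model."""
    # Normalize the ground coordinate with the RPC offsets and scales
    L = (lon_deg - p["long_off"]) / p["long_scale"]
    P = (lat_deg - p["lat_off"]) / p["lat_scale"]
    H = (height_m - p["height_off"]) / p["height_scale"]
    # Ratios of polynomials give normalized line and sample values
    line_n = line_num(L, P, H) / line_den(L, P, H)
    samp_n = samp_num(L, P, H) / samp_den(L, P, H)
    # Denormalize back to pixel units
    return (samp_n * p["samp_scale"] + p["samp_off"],
            line_n * p["line_scale"] + p["line_off"])


params = dict(long_off=-77.5, long_scale=0.5, lat_off=38.5, lat_scale=0.5,
              height_off=0.0, height_scale=500.0,
              line_off=5000.0, line_scale=5000.0, samp_off=5000.0, samp_scale=5000.0)
# Toy north-up model: sample follows longitude, line runs opposite to latitude
result = rpc_world_to_image(-77.25, 38.75, 100.0, params,
                            lambda L, P, H: -P, lambda L, P, H: 1.0,
                            lambda L, P, H: L, lambda L, P, H: 1.0)
print(result)  # (7500.0, 2500.0)
```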

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function implements the image to world transform by iteratively invoking world to image within a minimization routine to find a matching image coordinate. The longitude and latitude parameters are searched independently while the elevation of the world coordinate comes from the elevation model.

- Parameters:

image_coordinate – the image coordinate (x, y)

elevation_model – an optional elevation model used to transform the coordinate

options – optional hints, supports initial_guess and initial_search_distance

- Returns:

the corresponding world coordinate
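The description above says longitude and latitude are searched inside a minimization routine while the elevation comes from the elevation model. The sketch below substitutes Newton's method with a finite-difference Jacobian of world_to_image to show how such an inversion can work. The function name, parameters, and toy projection are hypothetical. The initial_guess argument plays the same role as the option of that name.

```python
from typing import Callable, Tuple

Project = Callable[[float, float, float], Tuple[float, float]]


def image_to_world(x: float, y: float, height: float, world_to_image: Project,
                   initial_guess: Tuple[float, float], step: float = 1e-6,
                   tolerance: float = 1e-6, max_iterations: int = 20) -> Tuple[float, float]:
    """Find (lon, lat) at a fixed height whose projection matches pixel (x, y)."""
    lon, lat = initial_guess
    for _ in range(max_iterations):
        px, py = world_to_image(lon, lat, height)
        ex, ey = x - px, y - py
        if abs(ex) < tolerance and abs(ey) < tolerance:
            break
        # Finite-difference partial derivatives of the projection
        x_lon, y_lon = world_to_image(lon + step, lat, height)
        x_lat, y_lat = world_to_image(lon, lat + step, height)
        a, c = (x_lon - px) / step, (y_lon - py) / step
        b, d = (x_lat - px) / step, (y_lat - py) / step
        det = a * d - b * c
        # Solve [[a, b], [c, d]] @ [dlon, dlat] = [ex, ey]
        lon += (d * ex - b * ey) / det
        lat += (-c * ex + a * ey) / det
    return lon, lat


def toy_projection(lon: float, lat: float, h: float) -> Tuple[float, float]:
    """A mildly nonlinear stand-in for a real sensor model."""
    return (1000.0 * lon + 50.0 * lat + 0.01 * h, -1000.0 * lat + 20.0 * lon * lat)


x, y = toy_projection(0.3, 0.2, 100.0)
lon, lat = image_to_world(x, y, 100.0, toy_projection, initial_guess=(0.0, 0.0))
```

A good initial guess matters for this kind of solver, which is why a composite model that seeds it from a fast approximation can pay off.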

- class aws.osml.photogrammetry.RSMContext(ground_domain: RSMGroundDomain, image_domain: RSMImageDomain)

Bases:

object

The RSM context contains information necessary to apply and interpret results from the sensor models on an image.

This current implementation only covers the ground domain and image domains necessary for georeferencing but it can be expanded as needed to support other RSM functions.

TODO: Implement the TimeContext, which can be used to identify the collection time of any x, y image coordinate.

TODO: Implement the IlluminationContext, which can be used to predict shadow information on an image.

TODO: Implement the TrajectoryModel, which captures the sensor's 3D position in relation to the image.

- class aws.osml.photogrammetry.RSMGroundDomain(ground_domain_form: RSMGroundDomainForm, ground_domain_vertices: List[WorldCoordinate], rectangular_coordinate_origin: WorldCoordinate | None = None, rectangular_coordinate_unit_vectors: Any | None = None, ground_reference_point: WorldCoordinate | None = None)

Bases:

object

The RSM ground domain is an approximation of the ground area where the RSM representation is valid. It is a solid in three-dimensional space bounded by a hexahedron with quadrilateral faces specified using eight three-dimensional vertices.

It is typically constructed from values in the NITF RSMID TRE. For more information see section 5.6 of STDI-0002 Volume 1 Appendix U.

- geodetic_to_ground_domain_coordinate(geodetic_coordinate: GeodeticWorldCoordinate) → WorldCoordinate

This function converts a WGS-84 geodetic world coordinate into a world coordinate that uses the domain coordinate system for this sensor model.

- Parameters:

geodetic_coordinate – the WGS-84 longitude, latitude, elevation

- Returns:

the x, y, z domain coordinate

- ground_domain_coordinate_to_geodetic(ground_domain_coordinate: WorldCoordinate) → GeodeticWorldCoordinate

This function converts an x, y, z coordinate defined in the ground domain of this sensor model into a WGS-84 longitude, latitude, elevation coordinate.

- Parameters:

ground_domain_coordinate – the x, y, z domain coordinate

- Returns:

the WGS-84 longitude, latitude, elevation coordinate
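For a RECTANGULAR ground domain, converting a geodetic coordinate involves two steps. The first is the standard WGS-84 geodetic-to-ECEF conversion. The second is an offset and a rotation. The sketch below assumes the rotation is given as three ECEF unit vectors, one per local axis, applied as dot products. This page does not show how RSMGroundDomain stores rectangular_coordinate_unit_vectors, so treat that part as an assumption. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

WGS84_A = 6378137.0                      # semi-major axis (m)
WGS84_F = 1.0 / 298.257223563            # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)     # first eccentricity squared


def geodetic_to_ecef(lon: float, lat: float, h: float) -> Tuple[float, float, float]:
    """Convert WGS-84 longitude/latitude (radians) and height (m) to ECEF meters."""
    sin_lat = math.sin(lat)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)  # prime vertical radius
    return ((n + h) * math.cos(lat) * math.cos(lon),
            (n + h) * math.cos(lat) * math.sin(lon),
            (n * (1.0 - WGS84_E2) + h) * sin_lat)


def ecef_to_rectangular(p: Sequence[float], origin: Sequence[float],
                        unit_vectors: Sequence[Sequence[float]]) -> Tuple[float, ...]:
    """Express an ECEF point in a frame offset by origin and rotated by unit_vectors."""
    d = [pc - oc for pc, oc in zip(p, origin)]
    return tuple(sum(u_i * d_i for u_i, d_i in zip(u, d)) for u in unit_vectors)


print(geodetic_to_ecef(0.0, 0.0, 0.0))  # (6378137.0, 0.0, 0.0)
```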

- class aws.osml.photogrammetry.RSMGroundDomainForm(value)

Bases:

Enum

The RSMGroundDomainForm defines how world coordinates (x, y, z) should be interpreted in this sensor model.

If GEODETIC, X, Y, and Z correspond to longitude, latitude, and height above the ellipsoid, respectively. Longitude is specified east of the prime meridian, and latitude is specified north of the equator. Units for X, Y, and Z are radians, radians, and meters, respectively. The range for Y is (-pi/2 to pi/2). The range for X is (-pi to pi) when GEODETIC and (0 to 2pi) when GEODETIC_2PI. The latter is specified when the RSM ground domain contains a longitude value near pi radians.

If RECTANGULAR, X, Y, and Z correspond to a coordinate system that is defined as an offset from and rotation about the WGS 84 Rectangular coordinate system.

For more information see the GRNDD TRE field definition in section 5.9 of STDI-0002 Volume 1 Appendix U.

- GEODETIC = 'G'

- GEODETIC_2PI = 'H'

- RECTANGULAR = 'R'
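The only difference between GEODETIC and GEODETIC_2PI is the longitude range. A minimal sketch of moving between the two ranges shows why the latter helps near the antimeridian. Points at 179.5 degrees east and 179.5 degrees west become 179.5 and 180.5 degrees, which keeps the ground domain contiguous. The function names and the exact endpoint handling are assumptions.

```python
import math


def to_geodetic_2pi(lon: float) -> float:
    """Map a longitude in (-pi, pi] radians onto [0, 2*pi)."""
    # Python's % with a positive modulus always returns a non-negative result
    return lon % (2.0 * math.pi)


def to_geodetic(lon: float) -> float:
    """Map a longitude in [0, 2*pi) radians back onto (-pi, pi]."""
    return lon - 2.0 * math.pi if lon > math.pi else lon


east = to_geodetic_2pi(math.radians(179.5))   # stays at 179.5 degrees
west = to_geodetic_2pi(math.radians(-179.5))  # becomes 180.5 degrees
```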

- class aws.osml.photogrammetry.RSMImageDomain(min_row: int, max_row: int, min_column: int, max_column: int)

Bases:

object

This RSM image domain is a rectangle defined by the minimum and maximum discrete row coordinate values and the minimum and maximum discrete column coordinate values. It is typically constructed from values in the NITF RSMID TRE. For more information see section 5.5 of STDI-0002 Volume 1 Appendix U.

- class aws.osml.photogrammetry.RSMLowOrderPolynomial(coefficients: List[float])

Bases:

object

This is an implementation of a “low order” polynomial used when generating coarse image row and column coordinates from a world coordinate. For additional information see Section 6.2 of STDI-0002 Volume 1 Appendix U.

- evaluate(world_coordinate: WorldCoordinate)

This function evaluates the polynomial for the given world coordinate by summing up the result of applying each coefficient to the world coordinate components.

- Parameters:

world_coordinate – the world coordinate

- Returns:

the resulting value

- class aws.osml.photogrammetry.RSMPolynomial(max_power_x: int, max_power_y: int, max_power_z: int, coefficients: List[float])

Bases:

object

This is an implementation of a general polynomial that can be applied to a world coordinate (i.e. an x, y, z vector). For additional information see Section 7.2 of STDI-0002 Volume 1 Appendix U or Section 10.3.3.1.1 of the Manual of Photogrammetry Sixth Edition.

- evaluate(normalized_world_coordinate: WorldCoordinate) → float

This function evaluates the polynomial for the given world coordinate by summing up the result of applying each coefficient to the world coordinate components. Note that these polynomials are usually defined with the assumption that the world coordinate has been normalized.

- Parameters:

normalized_world_coordinate – the world coordinate

- Returns:

the resulting value
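A general polynomial with per-axis maximum powers has (max_power_x + 1) * (max_power_y + 1) * (max_power_z + 1) coefficients. The sketch below assumes they are ordered with the x power varying fastest, then y, then z. Check that ordering against Section 7.2 of STDI-0002 Volume 1 Appendix U before relying on it. The function name is hypothetical.

```python
from typing import Sequence


def evaluate_rsm_polynomial(max_px: int, max_py: int, max_pz: int,
                            coefficients: Sequence[float],
                            x: float, y: float, z: float) -> float:
    """Evaluate sum(c_ijk * x**i * y**j * z**k) over all powers up to the maxima.

    Assumes coefficients are ordered with the x power varying fastest,
    then y, then z.
    """
    expected = (max_px + 1) * (max_py + 1) * (max_pz + 1)
    if len(coefficients) != expected:
        raise ValueError(f"expected {expected} coefficients, got {len(coefficients)}")
    total, idx = 0.0, 0
    for k in range(max_pz + 1):
        for j in range(max_py + 1):
            for i in range(max_px + 1):
                total += coefficients[idx] * x**i * y**j * z**k
                idx += 1
    return total


# Powers up to (1, 1, 0): c0 + c1*x + c2*y + c3*x*y
print(evaluate_rsm_polynomial(1, 1, 0, [1.0, 2.0, 3.0, 4.0], 2.0, 3.0, 5.0))  # 38.0
```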

- class aws.osml.photogrammetry.RSMPolynomialSensorModel(context: RSMContext, section_row: int, section_col: int, row_norm_offset: float, column_norm_offset: float, x_norm_offset: float, y_norm_offset: float, z_norm_offset: float, row_norm_scale: float, column_norm_scale: float, x_norm_scale: float, y_norm_scale: float, z_norm_scale: float, row_numerator_poly: RSMPolynomial, row_denominator_poly: RSMPolynomial, column_numerator_poly: RSMPolynomial, column_denominator_poly: RSMPolynomial)

Bases:

RSMSensorModel

This is an implementation of a Rational Polynomial Camera as defined in section 10.3.3.1.1 of the Manual of Photogrammetry Sixth Edition.

- world_to_image(geodetic_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function transforms a geodetic world coordinate (longitude, latitude, elevation) into an image coordinate (x, y).

- Parameters:

geodetic_coordinate – the world coordinate (longitude, latitude, elevation)

- Returns:

the resulting image coordinate (x,y)

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function implements the image to world transform by iteratively invoking world to image within a minimization routine to find a matching image coordinate. The longitude and latitude parameters are searched independently while the elevation of the world coordinate comes from the surface provided with the ground domain.

- Parameters:

image_coordinate – the image coordinate (x, y)

elevation_model – an optional elevation model used to transform the coordinate

options – optional hints, supports initial_guess and initial_search_distance

- Returns:

the corresponding world coordinate

- ground_domain_to_image(domain_coordinate: WorldCoordinate) → ImageCoordinate

This function implements the polynomial ground-to-image transform as defined by section 10.3.3.1.1 of the Manual of Photogrammetry sixth edition. The world coordinate is first normalized using the offsets and scale factors provided. Then the rational polynomial equations are run to produce an x,y image coordinate. Those components are then denormalized to find the final image coordinate.

- Parameters:

domain_coordinate – the ground domain coordinate (x, y, z)

- Returns:

the image coordinate (x, y)
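The three steps (normalize, evaluate the rational polynomials, denormalize) can be sketched as follows. The NormalizationParameters container and rational_ground_to_image function are hypothetical; polynomials are modeled as plain callables on normalized (x, y, z), and the result is returned as image (x, y) = (column, row), an assumption consistent with ImageCoordinate being (x, y).

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Any callable taking normalized (x, y, z) and returning a float.
Polynomial = Callable[[float, float, float], float]


@dataclass
class NormalizationParameters:
    x_offset: float
    y_offset: float
    z_offset: float
    row_offset: float
    column_offset: float
    x_scale: float
    y_scale: float
    z_scale: float
    row_scale: float
    column_scale: float


def rational_ground_to_image(
    x: float,
    y: float,
    z: float,
    params: NormalizationParameters,
    row_numerator: Polynomial,
    row_denominator: Polynomial,
    column_numerator: Polynomial,
    column_denominator: Polynomial,
) -> Tuple[float, float]:
    """Return the (column, row) pixel location for a ground domain coordinate."""
    # 1. Normalize the ground domain coordinate.
    xn = (x - params.x_offset) / params.x_scale
    yn = (y - params.y_offset) / params.y_scale
    zn = (z - params.z_offset) / params.z_scale
    # 2. Evaluate the rational polynomials in normalized space.
    row_n = row_numerator(xn, yn, zn) / row_denominator(xn, yn, zn)
    column_n = column_numerator(xn, yn, zn) / column_denominator(xn, yn, zn)
    # 3. Denormalize the results into pixel units.
    row = row_n * params.row_scale + params.row_offset
    column = column_n * params.column_scale + params.column_offset
    return column, row
```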

- normalize_world_coordinate(world_coordinate: WorldCoordinate) → WorldCoordinate

This is a helper function used to normalize a world coordinate for use with the polynomials in this sensor model.

- Parameters:

world_coordinate – the world coordinate (longitude, latitude, elevation)

- Returns:

a world coordinate where each component has been normalized

- denormalize_world_coordinate(world_coordinate: WorldCoordinate) → WorldCoordinate

This is a helper function used to convert a world coordinate that was normalized for use with the polynomials in this sensor model back into its original units.

- Parameters:

world_coordinate – the normalized world coordinate (longitude, latitude, elevation)

- Returns:

a world coordinate

- normalize_image_coordinate(image_coordinate: ImageCoordinate) → ImageCoordinate

This is a helper function used to normalize an image coordinate for use in these polynomials.

- Parameters:

image_coordinate – the image coordinate (x, y)

- Returns:

the normalized image coordinate (x, y)

- denormalize_image_coordinate(image_coordinate: ImageCoordinate) → ImageCoordinate

This is a helper function to denormalize an image coordinate after it has been processed by the polynomials.

- Parameters:

image_coordinate – the normalized image coordinate (x, y)

- Returns:

the image coordinate (x, y)

- static normalize(value: float, offset: float, scale: float) → float

This function normalizes a value with an offset and scale using the equations defined in Section 7.2 of STDI-0002 Volume 1 Appendix U.

- Parameters:

value – the value to be normalized

offset – the normalization offset

scale – the normalization scale

- Returns:

the normalized value

- static denormalize(value: float, offset: float, scale: float) → float

This function denormalizes a value with an offset and scale using the equations defined in Section 7.2 of STDI-0002 Volume 1 Appendix U.

- Parameters:

value – the normalized value

offset – the normalization offset

scale – the normalization scale

- Returns:

the denormalized value
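Assuming the usual offset-and-scale form, normalized = (value − offset) / scale, the two helpers are exact inverses of each other. These are standalone illustrations, not the library's static methods.

```python
def normalize(value: float, offset: float, scale: float) -> float:
    # Shift by the offset, then divide by the scale factor.
    return (value - offset) / scale


def denormalize(value: float, offset: float, scale: float) -> float:
    # Inverse of normalize: multiply by the scale factor, then add the offset.
    return value * scale + offset
```

For example, a row of 750 with offset 500 and scale 250 normalizes to 1.0, and denormalizing 1.0 returns 750.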

- class aws.osml.photogrammetry.RSMSectionedPolynomialSensorModel(context: RSMContext, row_num_image_sections: int, column_num_image_sections: int, row_section_size: float, column_section_size: float, row_polynomial: RSMLowOrderPolynomial, column_polynomial: RSMLowOrderPolynomial, section_sensor_models: List[List[SensorModel]])

Bases: RSMSensorModel

This is an implementation of a sectioned sensor model that splits the overall RSM domain into multiple regions, each serviced by a dedicated sensor model. A low-complexity sensor model covering the entire domain first identifies the general region of the image associated with a world coordinate; the final coordinate transform is then delegated to the sensor model associated with that section.

- world_to_image(geodetic_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function transforms a geodetic world coordinate (longitude, latitude, elevation) into an image coordinate (x, y).

- Parameters:

geodetic_coordinate – the world coordinate (longitude, latitude, elevation)

- Returns:

the resulting image coordinate (x,y)

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function implements the image to world transform by selecting the sensor model responsible for coordinates in the image section and then delegating the image to world calculations to that sensor model.

- Parameters:

image_coordinate – the image coordinate (x, y)

elevation_model – optional elevation model used to transform the coordinate

options – optional dictionary of hints passed on to the section sensor models

- Returns:

the corresponding world coordinate (longitude, latitude, elevation)

- get_section_index(image_coordinate: ImageCoordinate) → Tuple[int, int]

Uses the equations from STDI-0002 Volume 1 Appendix U Section 6.3 to calculate the section of this image containing the rough x, y. Note that these equations differ slightly from the documentation: the documented equations produce section numbers that start at 1, whereas this implementation starts at 0 to index more naturally into the array of sensor models. If the value falls outside the normal sections, it is clamped to use the sensor model from the closest available section.

- Parameters:

image_coordinate – the image coordinate (x, y)

- Returns:

section index (x, y)
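The zero-based lookup with clamping can be sketched as below. This assumes the sections form a regular grid that starts at a hypothetical origin (min_row, min_column), defaulting to 0, and that the rough row and column come from the low-order polynomial; the exact offsets used in Appendix U Section 6.3 may differ. The function name and parameters are hypothetical.

```python
import math
from typing import Tuple


def get_section_index(
    rough_column: float,
    rough_row: float,
    num_row_sections: int,
    num_column_sections: int,
    row_section_size: float,
    column_section_size: float,
    min_row: float = 0.0,
    min_column: float = 0.0,
) -> Tuple[int, int]:
    """Return zero-based (column_section, row_section), i.e. an (x, y) section index."""
    row_section = math.floor((rough_row - min_row) / row_section_size)
    column_section = math.floor((rough_column - min_column) / column_section_size)
    # Coordinates outside the sectioned area fall back to the closest section.
    row_section = min(max(row_section, 0), num_row_sections - 1)
    column_section = min(max(column_section, 0), num_column_sections - 1)
    return column_section, row_section
```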

- class aws.osml.photogrammetry.SARImageCoordConverter(scp_pixel: ImageCoordinate, scp_ecf: WorldCoordinate, u_row: ndarray, u_col: ndarray, row_ss: float, col_ss: float, first_pixel: ImageCoordinate = ImageCoordinate(coordinate=array([0., 0.])))

Bases: object

This class contains image grid and image plane coordinate conversions for a provided set of SICD parameters. Most of the equations are defined in Section 2 of the SICD Standard Volume 3.

- rowcol_to_xrowycol(row_col: ndarray) → ndarray

This function converts the row and column indexes (row, col) in the global image grid to SCP centered image coordinates (xrow, ycol) using equations (2) and (3) in Section 2.2 of the SICD Specification Volume 3.

- Parameters:

row_col – the [row, col] location as an array

- Returns:

the [xrow, ycol] location as an array

- xrowycol_to_rowcol(xrow_ycol: ndarray) → ndarray

This function converts the SCP centered image coordinates (xrow, ycol) to row and column indexes (row, col) in the global image grid using equations (2) and (3) in Section 2.2 of the SICD Specification Volume 3.

- Parameters:

xrow_ycol – the [xrow, ycol] location as an array

- Returns:

the [row, col] location as an array
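Equations (2) and (3) amount to shifting a pixel by the SCP pixel and scaling by the sample spacings. The sketch below assumes row_col and scp_row_col are [row, col] arrays in the global (full image) grid and that row_ss and col_ss are the row and column sample spacings in meters; it ignores the first_pixel chip offset the class also accepts, and the standalone function signatures are hypothetical.

```python
import numpy as np


def rowcol_to_xrowycol(row_col, scp_row_col, row_ss: float, col_ss: float) -> np.ndarray:
    # xrow = (row - scp_row) * row_ss, ycol = (col - scp_col) * col_ss
    offsets = np.asarray(row_col, dtype=float) - np.asarray(scp_row_col, dtype=float)
    return offsets * np.array([row_ss, col_ss])


def xrowycol_to_rowcol(xrow_ycol, scp_row_col, row_ss: float, col_ss: float) -> np.ndarray:
    # Inverse: row = xrow / row_ss + scp_row, col = ycol / col_ss + scp_col
    scaled = np.asarray(xrow_ycol, dtype=float) / np.array([row_ss, col_ss])
    return scaled + np.asarray(scp_row_col, dtype=float)
```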

- xrowycol_to_ipp(xrow_ycol: ndarray) → ndarray

This function converts SCP centered image coordinates (xrow, ycol) to an ECF coordinate on the image plane, the image plane point (IPP), using equations in Section 2.4 of the SICD Specification Volume 3.

- Parameters:

xrow_ycol – the [xrow, ycol] location as an array

- Returns:

the image plane point [x, y, z] ECF location on the image plane

- ipp_to_xrowycol(ipp: ndarray) → ndarray

This function converts an ECF location on the image plane into SCP centered image coordinates (xrow, ycol) using equations in Section 2.4 of the SICD Specification Volume 3.

- Parameters:

ipp – the image plane point [x, y, z] ECF location on the image plane

- Returns:

the [xrow, ycol] location as an array
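The forward mapping places the point at IPP = SCP + xrow·uRow + ycol·uCol. Because uRow and uCol need not be orthogonal, the inverse solves a 2x2 system built from θ = uRow·uCol. This is a minimal sketch assuming u_row and u_col are unit vectors; the standalone signatures are hypothetical.

```python
import numpy as np


def xrowycol_to_ipp(xrow_ycol, scp_ecf, u_row, u_col) -> np.ndarray:
    # IPP = SCP + xrow * uRow + ycol * uCol
    xrow, ycol = np.asarray(xrow_ycol, dtype=float)
    return (np.asarray(scp_ecf, dtype=float)
            + xrow * np.asarray(u_row, dtype=float)
            + ycol * np.asarray(u_col, dtype=float))


def ipp_to_xrowycol(ipp, scp_ecf, u_row, u_col) -> np.ndarray:
    u_row = np.asarray(u_row, dtype=float)
    u_col = np.asarray(u_col, dtype=float)
    delta = np.asarray(ipp, dtype=float) - np.asarray(scp_ecf, dtype=float)
    theta = float(np.dot(u_row, u_col))  # cosine of the angle between the grid axes
    # Dotting delta = xrow*uRow + ycol*uCol with uRow and uCol gives
    # [[1, theta], [theta, 1]] @ [xrow, ycol] = [delta.uRow, delta.uCol].
    matrix = np.array([[1.0, theta], [theta, 1.0]])
    rhs = np.array([np.dot(delta, u_row), np.dot(delta, u_col)])
    return np.linalg.solve(matrix, rhs)
```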

- class aws.osml.photogrammetry.SICDSensorModel(coord_converter: SARImageCoordConverter, coa_projection_set: COAProjectionSet, u_spn: ndarray, side_of_track: str = 'L', u_gpn: ndarray | None = None)

Bases: SensorModel

This is an implementation of the SICD sensor model as described by SICD Volume 3 Image Projections Description, NGA.STND.0024-3_1.3.0 (2021-11-30).

- static compute_u_spn(scp_ecf: WorldCoordinate, scp_arp: ndarray, scp_varp: ndarray, side_of_track: str) → ndarray

This helper function computes the slant plane normal.

- Parameters:

scp_ecf – Scene Center Point position in ECF coordinates

scp_arp – aperture reference point position

scp_varp – aperture reference point velocity

side_of_track – side of track imaged

- Returns:

unit vector for the slant plane normal
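The slant plane normal is perpendicular to both the ARP velocity and the line of sight from the ARP to the SCP. This sketch assumes the SICD LOOK convention (+1 for a left-looking collection, −1 for right-looking) so that the normal points away from the Earth; it is a standalone illustration, not the library's code.

```python
import numpy as np


def compute_u_spn(scp_ecf, scp_arp, scp_varp, side_of_track: str) -> np.ndarray:
    look = 1.0 if side_of_track == "L" else -1.0
    line_of_sight = np.asarray(scp_ecf, dtype=float) - np.asarray(scp_arp, dtype=float)
    # SPN = LOOK * (VARP x (SCP - ARP)), then scaled to unit length.
    spn = look * np.cross(np.asarray(scp_varp, dtype=float), line_of_sight)
    return spn / np.linalg.norm(spn)
```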

- static compute_u_gpn(scp_ecf: WorldCoordinate, u_row: ndarray, u_col: ndarray) → ndarray

- image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This is an implementation of an Image Grid to Scene point projection that first projects the image location to the R/RDot contour and then intersects that contour with the scene surface. If an elevation model is provided, the contour is intersected with the DEM surface, which may result in multiple solutions; in that case the solution with the lowest HAE is returned. If no elevation model is provided, a plane tangent to the SCP is assumed.

- Parameters:

image_coordinate – the x,y image coordinate

elevation_model – the optional elevation model, if none supplied a plane tangent to SCP is assumed

options – no additional options are supported at this time

- Returns:

the lon, lat, elev geodetic coordinate of the surface matching the image coordinate
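The plane case can be sketched from the geometry alone: R is the distance from the ARP to the scene point and RDot is its rate of change. This is a minimal sketch of intersecting one R/RDot contour with a plane through a reference point gref with normal u_z, assuming the LOOK convention (+1 left, −1 right); computing R/RDot from the image location, the DEM intersection, and the lowest-HAE selection are not shown, and the function name is hypothetical.

```python
import numpy as np


def r_rdot_to_ground_plane(r, rdot, arp, varp, gref, u_z, look):
    """Return the ECF point on the plane that has range r and range rate rdot."""
    arp = np.asarray(arp, dtype=float)
    varp = np.asarray(varp, dtype=float)
    gref = np.asarray(gref, dtype=float)
    u_z = np.asarray(u_z, dtype=float)
    u_z = u_z / np.linalg.norm(u_z)

    # Height of the ARP above the plane and the point on the plane below it.
    arp_z = float(np.dot(arp - gref, u_z))
    if abs(arp_z) > r:
        raise ValueError("range is shorter than the ARP height; no intersection")
    agpn = arp - arp_z * u_z

    # Ground range from that point to the solution, plus grazing angle terms.
    g = np.sqrt(r * r - arp_z * arp_z)
    cos_graz = g / r
    sin_graz = arp_z / r

    # In-plane axes: u_x along the projected velocity, u_y = u_z x u_x.
    varp_z = float(np.dot(varp, u_z))
    vx = varp - varp_z * u_z
    vx_mag = np.linalg.norm(vx)
    u_x = vx / vx_mag
    u_y = np.cross(u_z, u_x)

    # Azimuth from u_x implied by rdot, placed on the imaged side of the track.
    cos_az = (-rdot + varp_z * sin_graz) / (vx_mag * cos_graz)
    if abs(cos_az) > 1.0:
        raise ValueError("R/RDot contour does not intersect the plane")
    sin_az = look * np.sqrt(1.0 - cos_az * cos_az)

    return agpn + g * (cos_az * u_x + sin_az * u_y)
```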

- world_to_image(world_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This is an implementation of Section 6.1 Scene To Image Grid Projection for a single point.

- Parameters:

world_coordinate – lon, lat, elevation coordinate of the scene point

- Returns:

the x,y pixel location in this image

- class aws.osml.photogrammetry.SRTMTileSet(prefix: str = '', version: str = '1arc_v3', format_extension: str = '.tif')

Bases: DigitalElevationModelTileSet

A tile set for SRTM content downloaded from the USGS website.

- find_tile_id(geodetic_world_coordinate: GeodeticWorldCoordinate) → str | None

This method creates tile IDs that match the file names for grid tiles downloaded using the USGS Earth Explorer. These files appear to follow a <latitude degrees>_<longitude degrees>_<resolution>_<version><format> convention. Examples:

n47_e034_3arc_v2.tif: 3 arc second resolution

n47_e034_1arc_v3.tif: 1 arc second resolution

- Parameters:

geodetic_world_coordinate – the world coordinate of interest

- Returns:

the tile path or None if the DEM does not have coverage for this location
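The naming convention above can be reproduced by flooring the coordinate to whole degrees (the tile's southwest corner) and adding hemisphere letters. This is a hypothetical sketch that takes longitude and latitude in radians, as GeodeticWorldCoordinate does; unlike find_tile_id, it does not check whether SRTM actually covers the location.

```python
import math


def srtm_tile_id(
    longitude_radians: float,
    latitude_radians: float,
    prefix: str = "",
    version: str = "1arc_v3",
    format_extension: str = ".tif",
) -> str:
    # Tiles are named after the integer-degree southwest corner of the cell.
    lat_deg = math.floor(math.degrees(latitude_radians))
    lon_deg = math.floor(math.degrees(longitude_radians))
    lat_hemisphere = "n" if lat_deg >= 0 else "s"
    lon_hemisphere = "e" if lon_deg >= 0 else "w"
    return (f"{prefix}{lat_hemisphere}{abs(lat_deg):02d}_"
            f"{lon_hemisphere}{abs(lon_deg):03d}_{version}{format_extension}")
```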

- class aws.osml.photogrammetry.SensorModel

Bases: ABC

A sensor model is an abstraction that maps the information in a georeferenced image to the real world. Concrete implementations of this abstraction either capture the physical sensor model characteristics or, more frequently, an approximation of that physical model that allows users to transform world coordinates to image coordinates.

- abstract image_to_world(image_coordinate: ImageCoordinate, elevation_model: ElevationModel | None = None, options: Dict[str, Any] | None = None) → GeodeticWorldCoordinate

This function returns the longitude, latitude, elevation world coordinate associated with the x, y coordinate of any pixel in the image.

- Parameters:

image_coordinate – the x, y image coordinate

elevation_model – optional elevation model used to transform the coordinate

options – optional dictionary of hints that will be passed on to sensor models

- Returns:

the longitude, latitude, elevation world coordinate (a GeodeticWorldCoordinate)

- abstract world_to_image(world_coordinate: GeodeticWorldCoordinate) → ImageCoordinate

This function returns the x, y image coordinate associated with a given longitude, latitude, elevation world coordinate.

- Parameters:

world_coordinate – the longitude, latitude, elevation world coordinate

- Returns:

the x, y image coordinate

- class aws.osml.photogrammetry.SensorModelOptions(value)

These are common options for SensorModel operations. Not all implementations will support these, but they are included here to encourage convention.

- INITIAL_GUESS = 'initial_guess'

- INITIAL_SEARCH_DISTANCE = 'initial_search_distance'

- class aws.osml.photogrammetry.WorldCoordinate(coordinate: ArrayLike | None = None)

Bases: object

A world coordinate is a vector representing a position in 3D space. Note that this type is a simple value with 3 components that does not distinguish between geodetic and Cartesian coordinate systems (e.g. geocentric Earth-Centered Earth-Fixed or coordinates based on a local tangent plane).

- aws.osml.photogrammetry.geocentric_to_geodetic(ecef_world_coordinate: WorldCoordinate) → GeodeticWorldCoordinate

Converts an ECEF world coordinate (x, y, z) in meters into a (longitude, latitude, elevation) geodetic coordinate with units of radians, radians, meters.

- Parameters:

ecef_world_coordinate – the geocentric coordinate

- Returns:

the geodetic coordinate
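One common way to perform this conversion on the WGS84 ellipsoid is a short fixed-point iteration on latitude followed by a closed-form height. This is a standalone sketch of that technique, not necessarily the algorithm the library uses; ecef_to_geodetic is a hypothetical name.

```python
import math

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                   # semi-major axis (m)
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def ecef_to_geodetic(x: float, y: float, z: float, iterations: int = 10):
    """Return (longitude, latitude, height) in radians, radians, meters."""
    lon = math.atan2(y, x)
    p = math.hypot(x, y)  # distance from the polar axis
    # Initial latitude is exact for a point on the ellipsoid surface.
    lat = math.atan2(z, p * (1.0 - WGS84_E2))
    for _ in range(iterations):
        sin_lat = math.sin(lat)
        n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)  # prime vertical radius
        lat = math.atan2(z + WGS84_E2 * n * sin_lat, p)
    sin_lat, cos_lat = math.sin(lat), math.cos(lat)
    # This height formula stays well defined at the poles, where cos(lat) -> 0.
    h = p * cos_lat + z * sin_lat - WGS84_A * math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)
    return lon, lat, h
```

Each iteration shrinks the latitude error by roughly a factor of the eccentricity squared, so a handful of iterations is enough for points near the Earth's surface.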

- aws.osml.photogrammetry.geodetic_to_geocentric(geodetic_coordinate: GeodeticWorldCoordinate) → WorldCoordinate

Converts a geodetic world coordinate (longitude, latitude, elevation) with units of radians, radians, meters into an ECEF / geocentric world coordinate (x, y, z) in meters.

- Parameters:

geodetic_coordinate – the geodetic coordinate

- Returns:

the geocentric coordinate
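The forward conversion has a closed form on the WGS84 ellipsoid using the prime vertical radius of curvature N. This is a standalone sketch of the standard formula; geodetic_to_ecef is a hypothetical name, not the library function.

```python
import math

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                   # semi-major axis (m)
WGS84_F = 1.0 / 298.257223563         # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)  # first eccentricity squared


def geodetic_to_ecef(lon: float, lat: float, h: float):
    """Convert (radians, radians, meters above the ellipsoid) to ECEF meters."""
    sin_lat = math.sin(lat)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * sin_lat * sin_lat)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + h) * sin_lat
    return x, y, z
```

At the equator and prime meridian this yields (6378137, 0, 0); at the North Pole z equals the semi-minor axis, about 6356752.314 m.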