Human Attribute Recognition

Recognizes the human bodies in an image and returns, for each detected body, its position coordinates and an analysis of 16 attributes, such as gender, age, and clothing.

Applicable scenarios

Applicable to scenarios such as smart security, smart retail, and pedestrian search.

List of attributes

| Attribute | Values |
|---|---|
| Upper wear | Short sleeve, Long sleeve |
| Lower wear | Shorts/Short skirt, Trousers/Long skirt |
| Upper wear texture | Pattern, Solid, Stripe/Check |
| Backpack | Without backpack, With backpack |
| Wearing glasses | No, Yes |
| Wearing a hat | No, Yes |
| Body orientation | Front, Back, Left, Right |
| Truncated above | No, Yes |
| Truncated below | No, Yes |
| Occlusion | None, Light, Heavy |
| Wearing a mask | No, Yes |
| Gender | Male, Female |
| Age | Child, Teenager, Middle-aged, Elderly |
| Smoking | No, Yes |
| Using a phone | No, Yes |
| Carrying an item | No, Yes |

API reference

- HTTP request method: POST

- Request body parameters

| Name | Type | Required | Description |
|---|---|---|---|
| url | String | One of url or img | Image URL. HTTP, HTTPS, and S3 URLs are supported. Supported formats are JPG/JPEG/PNG/BMP, and the longest side must not exceed 4096 px. |
| img | String | One of url or img | Base64-encoded image data. |

- Example JSON request

Using an image URL:

```json
{
  "url": "Image URL address"
}
```

Using Base64-encoded image data:

```json
{
  "img": "Base64-encoded image data"
}
```
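To send a local image instead of a URL, the img field carries the file's Base64-encoded bytes. The following is a minimal sketch of building that request body in Python. It assumes the service accepts plain Base64 text without a `data:image/...;base64,` prefix and that the url formats (jpg/jpeg/png/bmp) also apply to img; the helper names are hypothetical. It checks only the file extension, not the 4096 px limit, which would need an image library.

```python
import base64
import json
from pathlib import Path

# Formats listed for the url parameter; assumed here to apply to img as well.
SUPPORTED_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def img_payload(image_bytes: bytes) -> str:
    """Return a JSON request body carrying the image as plain Base64 text."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return json.dumps({"img": encoded})


def img_payload_from_file(path: str) -> str:
    """Read a local image and build the img request body.

    Only the file extension is checked; the 4096 px longest-side limit is not.
    """
    file_path = Path(path)
    if file_path.suffix.lower() not in SUPPORTED_SUFFIXES:
        raise ValueError(f"unsupported image format: {file_path.suffix or '(none)'}")
    return img_payload(file_path.read_bytes())


# Usage (photo.jpg is a hypothetical local file):
# body = img_payload_from_file("photo.jpg")
```

The resulting string can be sent as the request body with any of the clients shown in the API test section.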

- Response parameters

| Name | Type | Description |
|---|---|---|
| Labels | List | List of human bodies detected in the image. Each item contains the attribute fields and BoundingBox listed below. |
| upper_wear | Dict | Short sleeve (短袖), long sleeve (长袖) |
| upper_wear_texture | Dict | Pattern (图案), solid (纯色), stripe/check (条纹/格子) |
| lower_wear | Dict | Shorts/short skirt (短裤/裙), trousers/long skirt (长裤/裙) |
| glasses | Dict | With glasses (有眼镜), without glasses (无眼镜) |
| bag | Dict | With backpack (有背包), without backpack (无背包) |
| headwear | Dict | With hat (有帽), without hat (无帽) |
| orientation | Dict | Left side (左侧面), back (背面), front (正面), right side (右侧面) |
| upper_cut | Dict | Truncated above (有截断), not truncated (无截断) |
| lower_cut | Dict | Truncated below (有截断), not truncated (无截断) |
| occlusion | Dict | No occlusion (无遮挡), light occlusion (轻度遮挡), heavy occlusion (重度遮挡) |
| face_mask | Dict | With mask (戴口罩), without mask (无口罩) |
| gender | Dict | Male (男性), female (女性) |
| age | Dict | Child (幼儿), teenager (青少年), middle-aged (中年), elderly (老年) |
| smoke | Dict | Smoking (吸烟), not smoking (未吸烟) |
| cellphone | Dict | Using a phone (使用手机), not using a phone (未使用手机) |
| carrying_item | Dict | Carrying an item (有手提物), not carrying an item (无手提物) |
| BoundingBox | Dict | Position of the human body in the image: Top, Left, Width, and Height, expressed as ratios (0 to 1) of the full image size |
| LabelModelVersion | String | Version number of the current model |

Each attribute field maps its class labels (returned as Chinese strings, as in the example response) to confidence scores from 0 to 100.
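Because BoundingBox values are ratios rather than pixels, drawing or cropping a detected body requires scaling them by the image size. The following is a minimal sketch of that conversion; it assumes Left and Width are fractions of the image width, Top and Height are fractions of the image height, and the origin is the top-left corner. The function name and the 1920x1080 image size are hypothetical.

```python
from typing import Dict, Tuple


def bbox_to_pixels(box: Dict[str, float], image_width: int,
                   image_height: int) -> Tuple[int, int, int, int]:
    """Convert a ratio-based BoundingBox to (left, top, width, height) in pixels.

    Assumes Left/Width are fractions of the image width and Top/Height are
    fractions of the image height, measured from the top-left corner.
    """
    left = round(box["Left"] * image_width)
    top = round(box["Top"] * image_height)
    width = round(box["Width"] * image_width)
    height = round(box["Height"] * image_height)
    return left, top, width, height


# BoundingBox copied from the example response, on a hypothetical 1920x1080 image
box = {
    "Width": 0.11781848725818456,
    "Height": 0.43450208474661556,
    "Left": 0.5310931977771577,
    "Top": 0.45263674786982644,
}
print(bbox_to_pixels(box, 1920, 1080))
```

Rounding to whole pixels keeps the result usable directly as crop coordinates; keep the raw ratios if sub-pixel precision matters.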

- Example JSON response

```json
{
  "Labels": [
    {
      "upper_wear": {
        "短袖": 0.01,
        "长袖": 99.99
      },
      "upper_wear_texture": {
        "图案": 0,
        "纯色": 99.55,
        "条纹/格子": 0.45
      },
      "lower_wear": {
        "短裤/裙": 0.15,
        "长裤/裙": 99.85
      },
      "glasses": {
        "有眼镜": 57.74,
        "无眼镜": 42.26
      },
      "bag": {
        "有背包": 0.69,
        "无背包": 99.31
      },
      "headwear": {
        "有帽": 97.02,
        "无帽": 2.98
      },
      "orientation": {
        "左侧面": 99.99,
        "背面": 0,
        "正面": 0,
        "右侧面": 0.01
      },
      "upper_cut": {
        "有截断": 0,
        "无截断": 100
      },
      "lower_cut": {
        "无截断": 0.18,
        "有截断": 99.82
      },
      "occlusion": {
        "无遮挡": 100,
        "重度遮挡": 0,
        "轻度遮挡": 0
      },
      "face_mask": {
        "无口罩": 100,
        "戴口罩": 0
      },
      "gender": {
        "男性": 100,
        "女性": 0
      },
      "age": {
        "幼儿": 0,
        "青少年": 100,
        "中年": 0,
        "老年": 0
      },
      "smoke": {
        "吸烟": 0,
        "未吸烟": 100
      },
      "cellphone": {
        "使用手机": 0,
        "未使用手机": 100
      },
      "carrying_item": {
        "有手提物": 0.03,
        "无手提物": 99.97
      },
      "BoundingBox": {
        "Width": 0.11781848725818456,
        "Height": 0.43450208474661556,
        "Left": 0.5310931977771577,
        "Top": 0.45263674786982644
      }
    },
    ...
  ],
  "LabelModelVersion": "1.2.0"
}
```
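Each attribute in the response is a score distribution rather than a single answer, so a client usually reduces it to the top class. The following is a minimal sketch of that reduction. The English glosses in LABEL_EN are this document's translations of the Chinese keys shown in the example response, not values returned by the API, and the function names are hypothetical.

```python
from typing import Dict, Tuple

# English glosses for the Chinese class labels in the example response.
LABEL_EN = {
    "短袖": "short sleeve", "长袖": "long sleeve",
    "图案": "pattern", "纯色": "solid", "条纹/格子": "stripe/check",
    "短裤/裙": "shorts/short skirt", "长裤/裙": "trousers/long skirt",
    "有眼镜": "with glasses", "无眼镜": "without glasses",
    "有背包": "with backpack", "无背包": "without backpack",
    "有帽": "with hat", "无帽": "without hat",
    "左侧面": "left side", "右侧面": "right side", "正面": "front", "背面": "back",
    "有截断": "truncated", "无截断": "not truncated",
    "无遮挡": "no occlusion", "轻度遮挡": "light occlusion", "重度遮挡": "heavy occlusion",
    "戴口罩": "with mask", "无口罩": "without mask",
    "男性": "male", "女性": "female",
    "幼儿": "child", "青少年": "teenager", "中年": "middle-aged", "老年": "elderly",
    "吸烟": "smoking", "未吸烟": "not smoking",
    "使用手机": "using phone", "未使用手机": "not using phone",
    "有手提物": "carrying item", "无手提物": "not carrying item",
}


def top_class(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the highest-scoring class (translated when known) and its score."""
    label, score = max(scores.items(), key=lambda item: item[1])
    return LABEL_EN.get(label, label), score


def summarize(person: Dict[str, dict]) -> Dict[str, Tuple[str, float]]:
    """Reduce one entry of Labels to {attribute: (top class, confidence)}."""
    return {
        attribute: top_class(scores)
        for attribute, scores in person.items()
        if attribute != "BoundingBox"
    }


# Usage with two attributes copied from the example response
person = {
    "upper_wear": {"短袖": 0.01, "长袖": 99.99},
    "glasses": {"有眼镜": 57.74, "无眼镜": 42.26},
    "BoundingBox": {"Width": 0.118, "Height": 0.435, "Left": 0.531, "Top": 0.453},
}
print(summarize(person))
# {'upper_wear': ('long sleeve', 99.99), 'glasses': ('with glasses', 57.74)}
```

The glasses score of 57.74 shows that the top class is not always a confident prediction; a caller may want to compare the score against a threshold of its own choosing before acting on it.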

API test

You can test the API with any of the following tools: API Explorer, Postman, cURL, Python, or Java.

API Explorer

Prerequisites

When deploying the solution, you need to:

- set the parameter API Explorer to yes.
- set the parameter API Gateway Authorization to NONE.

Otherwise, you can only view the API definitions in the API Explorer and cannot call the APIs online.

Steps

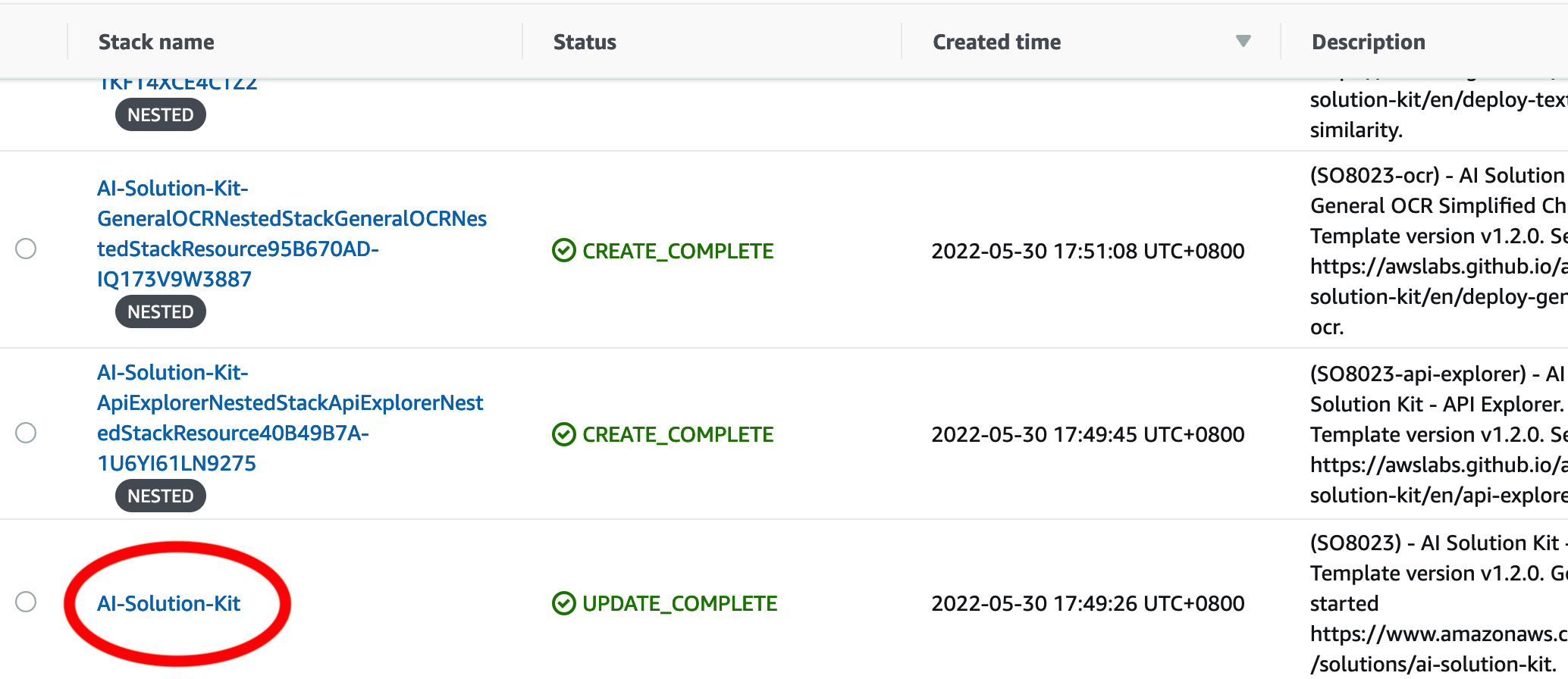

- Sign in to the AWS CloudFormation console.

-

On the Stacks page, select the solution’s root stack. Do not select the NESTED stack.

-

Choose the Outputs tab, and find the URL for APIExplorer.

-

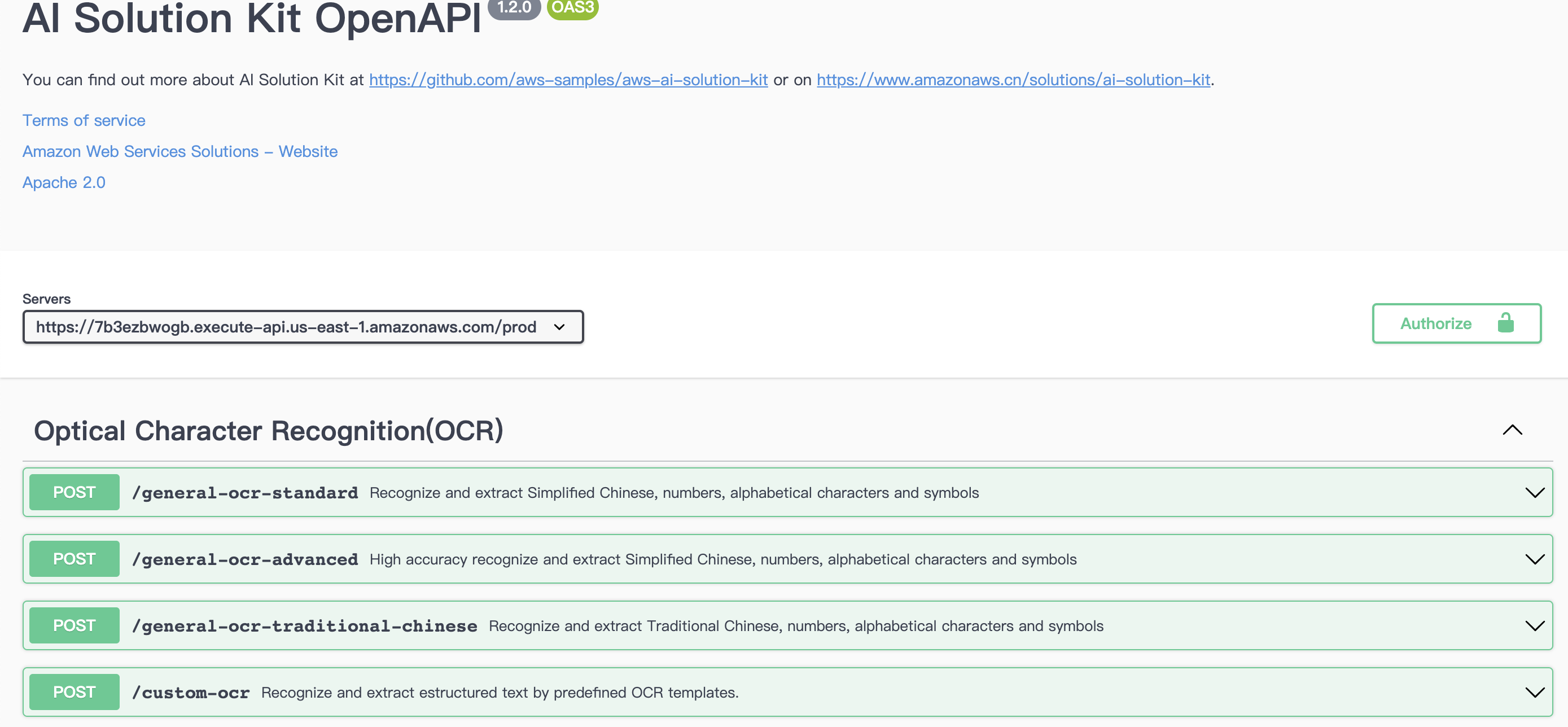

Click the URL to access the API explorer. The APIs that you have selected during deployment will be displayed.

-

For the API you want to test, click the down arrow to display the request method.

- Choose the Try it out button, and enter the correct Body data to test API and check the test result.

- Make sure the format is correct, and choose Execute.

- Check the returned result in JSON format in the Responses body. If needed, copy or download the result.

- Check the Response headers.

- (Optional) Choose Clear next to the Execute button to clear the request body and responses.

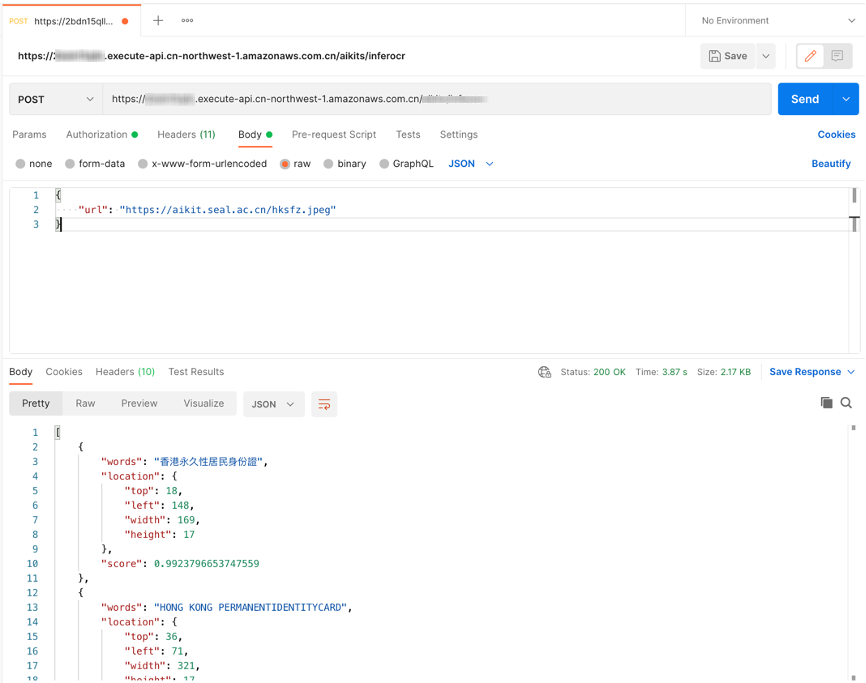
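The URL lookup in the Outputs tab can also be scripted. The following is a minimal sketch using boto3's CloudFormation `describe_stacks` call. It assumes the output's key starts with `APIExplorer`, as the console step above suggests; the stack name in the usage line and the helper names are hypothetical. Only `stack_outputs` needs boto3 and AWS credentials.

```python
from typing import Dict, List, Optional


def find_output(outputs: List[Dict[str, str]], key_prefix: str) -> Optional[str]:
    """Return the OutputValue of the first output whose OutputKey starts with key_prefix."""
    for output in outputs:
        if output.get("OutputKey", "").startswith(key_prefix):
            return output.get("OutputValue")
    return None


def stack_outputs(stack_name: str) -> List[Dict[str, str]]:
    """Fetch the Outputs of a CloudFormation stack (needs boto3 and AWS credentials)."""
    import boto3  # imported here so find_output works without boto3 installed

    cloudformation = boto3.client("cloudformation")
    stacks = cloudformation.describe_stacks(StackName=stack_name)["Stacks"]
    return stacks[0].get("Outputs", [])


# Usage (hypothetical root stack name):
# print(find_output(stack_outputs("my-solution-root-stack"), "APIExplorer"))
```

Query the root stack, not a nested one, for the same reason as in the console steps.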

Postman (AWS_IAM Authentication)

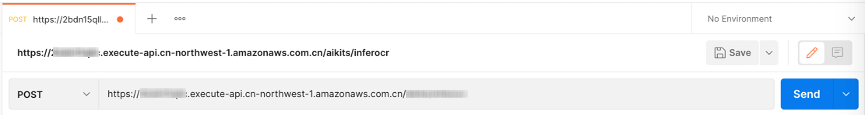

- Sign in to the AWS CloudFormation console.

- On the Stacks page, select the solution’s root stack.

- Choose the Outputs tab, and find the URL with the prefix

GeneralOCR. -

Create a new tab in Postman. Paste the URL into the address bar, and select POST as the HTTP call method.

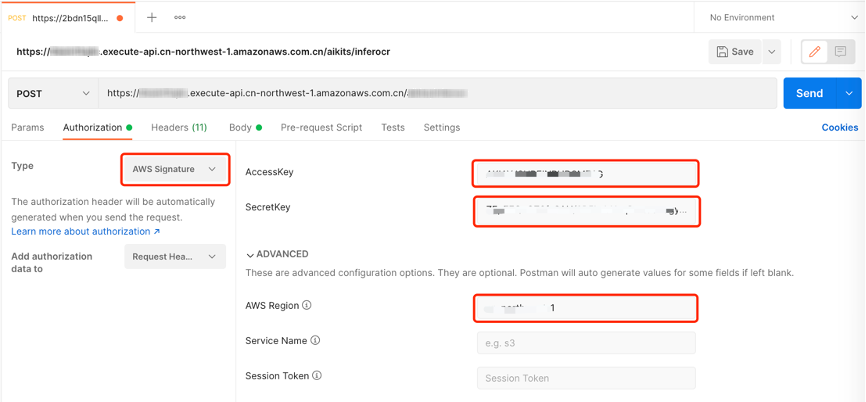

-

Open the Authorization configuration, select Amazon Web Service Signature from the drop-down list, and enter the AccessKey, SecretKey and Amazon Web Service Region of the corresponding account (such as cn-north-1 or cn-northwest-1 ).

-

Open the Body configuration item and select the raw and JSON data types.

- Enter the test data in the Body, and click the Send button to see the corresponding return results.

```json
{
  "url": "Image URL address"
}
```

cURL

Replace [API_ID], [AWS_REGION], and [STAGE] with the values for your deployment. These requests are not signed, so they work only when API Gateway Authorization is set to NONE.

- Windows

```bat
curl --location --request POST "https://[API_ID].execute-api.[AWS_REGION].amazonaws.com/[STAGE]/custom_ocr" ^
--header "Content-Type: application/json" ^
--data-raw "{\"url\": \"Image URL address\"}"
```

- Linux/macOS

```bash
curl --location --request POST 'https://[API_ID].execute-api.[AWS_REGION].amazonaws.com/[STAGE]/custom_ocr' \
--header 'Content-Type: application/json' \
--data-raw '{
  "url": "Image URL address"
}'
```

Python (AWS_IAM Authentication)

```python
# Requires: pip install requests boto3 aws-requests-auth
import json

import requests
from aws_requests_auth.boto_utils import BotoAWSRequestsAuth

# Signs each request with SigV4, using the credentials boto3 finds
# (environment variables, shared credentials file, or an IAM role).
auth = BotoAWSRequestsAuth(aws_host='[API_ID].execute-api.[AWS_REGION].amazonaws.com',
                           aws_region='[AWS_REGION]',
                           aws_service='execute-api')

url = 'https://[API_ID].execute-api.[AWS_REGION].amazonaws.com/[STAGE]/custom_ocr'

payload = {
    'url': 'Image URL address'
}

response = requests.post(url,
                         data=json.dumps(payload),
                         headers={'Content-Type': 'application/json'},
                         auth=auth)

print(response.json())
```

Python (NONE Authentication)

```python
import json

import requests

# Replace the placeholders with the values for your deployment.
url = "https://[API_ID].execute-api.[AWS_REGION].amazonaws.com/[STAGE]/custom_ocr"

payload = json.dumps({
    "url": "Image URL address"
})

headers = {
    "Content-Type": "application/json"
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)
```

Java

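```java
import java.io.IOException;

import okhttp3.MediaType;
import okhttp3.OkHttpClient;
import okhttp3.Request;
import okhttp3.RequestBody;
import okhttp3.Response;

public class HumanAttributeRecognitionClient {
    public static void main(String[] args) throws IOException {
        OkHttpClient client = new OkHttpClient().newBuilder().build();
        MediaType mediaType = MediaType.parse("application/json");
        RequestBody body = RequestBody.create(mediaType, "{\"url\": \"Image URL address\"}");
        Request request = new Request.Builder()
                .url("https://[API_ID].execute-api.[AWS_REGION].amazonaws.com/[STAGE]/custom_ocr")
                .method("POST", body)
                .addHeader("Content-Type", "application/json")
                .build();
        // Close the response to release the connection, then print the JSON body.
        try (Response response = client.newCall(request).execute()) {
            System.out.println(response.body().string());
        }
    }
}
```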

Cost estimation

You are responsible for the cost of each Amazon Web Services service used while running the solution. As of this revision, the main cost factors are:

- Amazon API Gateway calls
- Amazon API Gateway data output
- Amazon CloudWatch Logs storage
- Amazon Elastic Container Registry storage

If you choose an AWS Lambda based deployment, the factors also include:

- AWS Lambda invocations
- AWS Lambda running time

If you choose an Amazon SageMaker based deployment, the factors also include:

- Amazon SageMaker endpoint node instance type

- Amazon SageMaker endpoint node data input

- Amazon SageMaker endpoint node data output

Cost estimation example 1

In the AWS China (Ningxia) Region operated by NWCD (cn-northwest-1), process 1 million images of 1 MB each, with each invocation running for 1 second.

The cost of using this solution to process these images is shown below:

| Service | Dimensions | Cost |
|---|---|---|
| AWS Lambda | 1 million invocations | ¥1.36 |
| AWS Lambda | 8192 MB memory, 1 second per invocation | ¥907.8 |
| Amazon API Gateway | 1 million invocations | ¥28.94 |
| Amazon API Gateway | 100 KB data output per invocation, ¥0.933/GB | ¥93.3 |
| Amazon CloudWatch Logs | 10 KB per invocation, ¥6.228/GB | ¥62.28 |
| Amazon Elastic Container Registry | 0.5 GB storage, ¥0.69/GB per month | ¥0.35 |
| Total | | ¥1094.03 |

Cost estimation example 2

In the US East (Ohio) Region (us-east-2), process 1 million images of 1 MB each, with each invocation running for 1 second.

The cost of using this solution to process these images is shown below:

| Service | Dimensions | Cost |
|---|---|---|
| AWS Lambda | 1 million invocations | $0.20 |
| AWS Lambda | 8192 MB memory, 1 second per invocation | $133.3 |
| Amazon API Gateway | 1 million invocations | $3.5 |
| Amazon API Gateway | 100 KB data output per invocation, $0.09/GB | $9 |
| Amazon CloudWatch Logs | 10 KB per invocation, $0.50/GB | $5 |
| Amazon Elastic Container Registry | 0.5 GB storage, $0.1/GB per month | $0.05 |
| Total | | $151.05 |
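The data-volume rows in both cost tables follow from per-call arithmetic: 1 million calls at 100 KB each is 100 GB of API Gateway output, and 1 million calls at 10 KB each is 10 GB of logs. The following is a minimal sketch that recomputes those rows from their per-GB prices and sums each table. It treats 1 GB as 1,000,000 KB, which is the conversion the tables imply; the Lambda and per-request rows are copied from the tables rather than derived, because their unit prices are not listed here.

```python
CALLS = 1_000_000  # 1 million invocations, as in both tables


def data_cost(kb_per_call: float, price_per_gb: float, calls: int = CALLS) -> float:
    """Cost of a per-call data volume billed per GB (assumes 1 GB = 1,000,000 KB)."""
    total_gb = kb_per_call * calls / 1_000_000
    return total_gb * price_per_gb


def total(line_items: dict) -> float:
    """Sum all line items, rounded to two decimal places."""
    return round(sum(line_items.values()), 2)


# cn-northwest-1, CNY. Lambda and per-request rows are copied from the table.
ningxia_cny = {
    "Lambda invocations": 1.36,
    "Lambda duration (8192 MB x 1 s)": 907.8,
    "API Gateway invocations": 28.94,
    "API Gateway data output": data_cost(100, 0.933),  # 100 GB
    "CloudWatch Logs": data_cost(10, 6.228),           # 10 GB
    "ECR storage": 0.35,  # 0.5 GB x 0.69/GB-month = 0.345, rounded in the table
}

# us-east-2, USD. Lambda and per-request rows are copied from the table.
ohio_usd = {
    "Lambda invocations": 0.20,
    "Lambda duration (8192 MB x 1 s)": 133.3,
    "API Gateway invocations": 3.5,
    "API Gateway data output": data_cost(100, 0.09),   # 100 GB
    "CloudWatch Logs": data_cost(10, 0.50),            # 10 GB
    "ECR storage": 0.5 * 0.1,                          # 0.5 GB x 0.1/GB-month
}

print(f"cn-northwest-1 total: ¥{total(ningxia_cny):.2f}")
print(f"us-east-2 total: ${total(ohio_usd):.2f}")
```

Running it prints ¥1094.03 and $151.05, the sums of the listed line items. To estimate a different workload, change CALLS and the per-call sizes; the Lambda rows would also need rescaling by invocation count and duration.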

Uninstall the deployment

You can uninstall the Human Attribute Recognition feature through AWS CloudFormation, as described in Add or remove AI features. In the parameters section, make sure the HumanAttributeRecognition parameter is set to no.

Note

Uninstalling takes approximately 10 minutes.