S3DataCopy

Copy data from one bucket to another at deployment time.

Overview

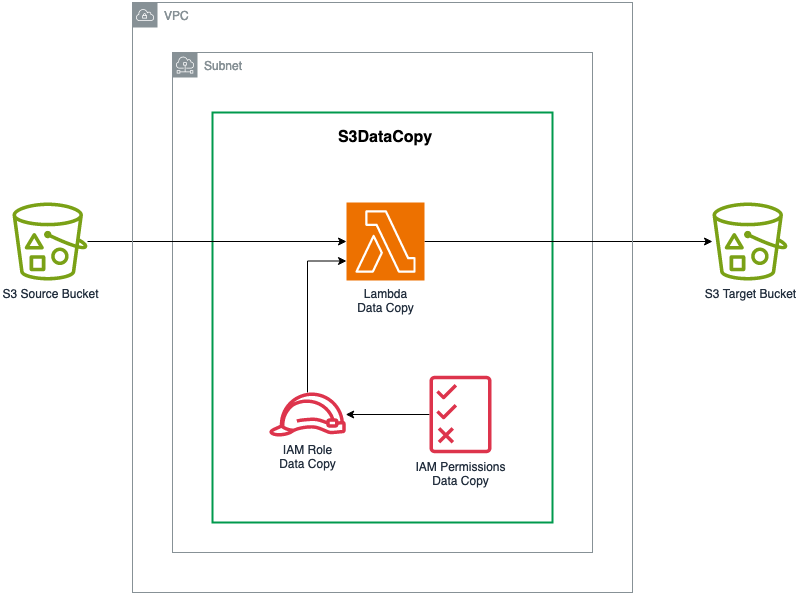

The S3DataCopy construct copies objects from one bucket to another at CDK deployment time:

- The copy is part of the CDK and CloudFormation deployment process. It uses a synchronous CDK Custom Resource running on AWS Lambda.

- The Lambda function is written in TypeScript and copies objects from the source bucket to the target bucket.

- The execution role used by the Lambda function is scoped to least privilege. A custom role can be provided instead.

- The Lambda function can be executed in an Amazon VPC within private subnets. By default, it runs inside VPCs owned by the AWS Lambda service.

- The Lambda function is granted read access on the source bucket and write access on the target bucket through the execution role policy. The construct doesn't grant cross-account access.
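To make the read/write scoping described above more concrete, here is a minimal sketch of what a least-privilege policy for such a copy could look like. The `buildCopyPolicy` helper and its types are hypothetical and written for illustration only; the statements that S3DataCopy actually generates may differ, and the sketch assumes the standard `aws` partition.

```typescript
// Hypothetical types and helper, for illustration only. The policy generated
// by the S3DataCopy construct may contain different actions or resources.
interface PolicyStatement {
  Effect: 'Allow';
  Action: string[];
  Resource: string[];
}

interface PolicyDocument {
  Version: '2012-10-17';
  Statement: PolicyStatement[];
}

// Builds a policy document that allows reading under a source prefix and
// writing under a target prefix. Assumes the standard "aws" partition.
function buildCopyPolicy(
  sourceBucket: string,
  sourcePrefix: string,
  targetBucket: string,
  targetPrefix: string,
): PolicyDocument {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Listing is a bucket-level action, so it targets the bucket ARN.
        Effect: 'Allow',
        Action: ['s3:ListBucket'],
        Resource: [`arn:aws:s3:::${sourceBucket}`],
      },
      {
        // Read objects only under the source prefix.
        Effect: 'Allow',
        Action: ['s3:GetObject'],
        Resource: [`arn:aws:s3:::${sourceBucket}/${sourcePrefix}*`],
      },
      {
        // Write objects only under the target prefix.
        Effect: 'Allow',
        Action: ['s3:PutObject'],
        Resource: [`arn:aws:s3:::${targetBucket}/${targetPrefix}*`],
      },
    ],
  };
}

const policy = buildCopyPolicy('nyc-tlc', 'trip data/', 'staging-bucket', 'staging-data/');
console.log(JSON.stringify(policy, null, 2));
```

Because the policy is attached to the execution role in the deploying account, it covers same-account buckets only. A bucket owned by another account would also need a bucket policy on its side, which the construct does not create.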

Usage

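TypeScript:

```typescript
import * as cdk from 'aws-cdk-lib';
import { Bucket } from 'aws-cdk-lib/aws-s3';
import { Construct } from 'constructs';
// DSF library; adjust the package name to match the version you have installed
import * as dsf from '@cdklabs/aws-data-solutions-framework';

class ExampleDefaultS3DataCopyStack extends cdk.Stack {
  constructor(scope: Construct, id: string) {
    super(scope, id);

    // Reference the existing source and target buckets by name
    const sourceBucket = Bucket.fromBucketName(this, 'sourceBucket', 'nyc-tlc');
    const targetBucket = Bucket.fromBucketName(this, 'destinationBucket', 'staging-bucket');

    // Copy the objects under 'trip data/' to 'staging-data/' during deployment
    new dsf.utils.S3DataCopy(this, 'S3DataCopy', {
      sourceBucket,
      sourceBucketPrefix: 'trip data/',
      sourceBucketRegion: 'us-east-1',
      targetBucket,
      targetBucketPrefix: 'staging-data/',
    });
  }
}
```

Python:

```python
import aws_cdk as cdk
from aws_cdk.aws_s3 import Bucket
# DSF library; adjust the module name to match the version you have installed
import cdklabs.aws_data_solutions_framework as dsf


class ExampleDefaultS3DataCopyStack(cdk.Stack):
    def __init__(self, scope, id):
        super().__init__(scope, id)

        # Reference the existing source and target buckets by name
        source_bucket = Bucket.from_bucket_name(self, "sourceBucket", "nyc-tlc")
        target_bucket = Bucket.from_bucket_name(self, "destinationBucket", "staging-bucket")

        # Copy the objects under "trip data/" to "staging-data/" during deployment
        dsf.utils.S3DataCopy(self, "S3DataCopy",
            source_bucket=source_bucket,
            source_bucket_prefix="trip data/",
            source_bucket_region="us-east-1",
            target_bucket=target_bucket,
            target_bucket_prefix="staging-data/",
        )
```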
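The two prefix properties in the example above determine which objects are copied and where they land. As a rough sketch, and assuming the copy replaces the source prefix with the target prefix on each object key (an assumption about the Lambda function's behavior, not something this page confirms), the key mapping could look like the following. The `toTargetKey` helper and the sample keys are hypothetical.

```typescript
// Hypothetical helper, for illustration only: maps a source object key to a
// target key by swapping the source prefix for the target prefix.
function toTargetKey(sourceKey: string, sourcePrefix: string, targetPrefix: string): string {
  if (!sourceKey.startsWith(sourcePrefix)) {
    throw new Error(`Key "${sourceKey}" is not under prefix "${sourcePrefix}"`);
  }
  // Keep everything after the source prefix and prepend the target prefix.
  return targetPrefix + sourceKey.slice(sourcePrefix.length);
}

// Illustrative sample keys under the source prefix used in the example.
const sourceKeys = [
  'trip data/yellow_tripdata_2023-01.parquet',
  'trip data/green_tripdata_2023-01.parquet',
];

const targetKeys = sourceKeys.map((key) => toTargetKey(key, 'trip data/', 'staging-data/'));
console.log(targetKeys);
```

Under this assumption, both prefixes should end with a trailing slash so that the object names are preserved as-is beneath the target prefix.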

Private networks

The Lambda function used by the custom resource can be deployed in a VPC by passing the VPC and a selection of private subnets.

Public subnets are not supported.

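TypeScript:

```typescript
import { SubnetType, Vpc } from 'aws-cdk-lib/aws-ec2';

// Vpc.fromLookup requires the stack to define an explicit account and region (env)
const vpc = Vpc.fromLookup(this, 'Vpc', { vpcName: 'my-vpc' });
// Select private subnets only; public subnets are not supported
const subnets = vpc.selectSubnets({ subnetType: SubnetType.PRIVATE_WITH_EGRESS });

// sourceBucket and targetBucket are defined as in the Usage example
new dsf.utils.S3DataCopy(this, 'S3DataCopy', {
  sourceBucket,
  sourceBucketPrefix: 'trip data/',
  sourceBucketRegion: 'us-east-1',
  targetBucket,
  targetBucketPrefix: 'staging-data/',
  vpc,
  subnets,
});
```

Python:

```python
from aws_cdk.aws_ec2 import SubnetType, Vpc

# Vpc.from_lookup requires the stack to define an explicit account and region (env)
vpc = Vpc.from_lookup(self, "Vpc", vpc_name="my-vpc")
# Select private subnets only; public subnets are not supported
subnets = vpc.select_subnets(subnet_type=SubnetType.PRIVATE_WITH_EGRESS)

# source_bucket and target_bucket are defined as in the Usage example
dsf.utils.S3DataCopy(self, "S3DataCopy",
    source_bucket=source_bucket,
    source_bucket_prefix="trip data/",
    source_bucket_region="us-east-1",
    target_bucket=target_bucket,
    target_bucket_prefix="staging-data/",
    vpc=vpc,
    subnets=subnets,
)
```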