DataCatalogDatabase

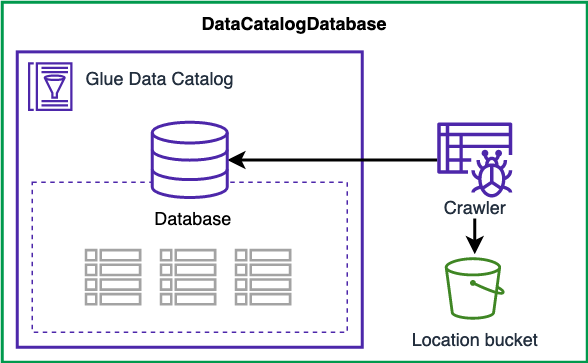

AWS Glue Catalog database for an Amazon S3 dataset.

Overview

DataCatalogDatabase is an AWS Glue Data Catalog database configured for an Amazon S3-based dataset:

- The database default location points to an S3 bucket location: s3://<locationBucket>/<locationPrefix>/

- The database can store various tables structured in their respective prefixes, for example: s3://<locationBucket>/<locationPrefix>/<table_prefix>/

- By default, a database-level crawler is scheduled to run once a day (00:01h local timezone). The crawler can be disabled, and its schedule/frequency can be modified with a cron expression.

- The permission model of the database can use IAM, Lake Formation, or Hybrid mode.
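The location layout described in the list above can be illustrated with a small sketch. The helper name `table_location` and the slash normalization are assumptions made for illustration; they are not part of DSF or the AWS CDK, and the construct itself may handle leading or trailing slashes differently.

```python
def table_location(location_bucket: str, location_prefix: str, table_prefix: str = "") -> str:
    """Build the S3 URI of the database location, or of one table under it.

    Hypothetical helper: it only mirrors the layout
    s3://<locationBucket>/<locationPrefix>/<table_prefix>/ described above.
    """
    # Strip surrounding slashes so '/databasePath' and 'databasePath/' behave the same
    parts = [part.strip("/") for part in (location_prefix, table_prefix)]
    path = "/".join(part for part in parts if part)
    if not path:
        return f"s3://{location_bucket}/"
    return f"s3://{location_bucket}/{path}/"


# Database default location
print(table_location("my-bucket", "/databasePath"))
# Location of a table named 'orders' inside that database
print(table_location("my-bucket", "/databasePath", "orders"))
```

Here `my-bucket` and `orders` are placeholder names; with these inputs the two calls print `s3://my-bucket/databasePath/` and `s3://my-bucket/databasePath/orders/`.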

The AWS Glue Data Catalog resources created by the DataCatalogDatabase construct are not encrypted, because encryption is only available at the catalog level. Changing the encryption at the catalog level has a wide impact on existing Glue resources and on their producers/consumers. Similarly, changing the catalog-level encryption configuration after this construct is deployed can break all the resources created as part of DSF on AWS.

Usage

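TypeScript:

    import * as cdk from 'aws-cdk-lib';
    import { Bucket } from 'aws-cdk-lib/aws-s3';
    import { Construct } from 'constructs';
    import * as dsf from '@cdklabs/aws-data-solutions-framework';

    class ExampleDefaultDataCatalogDatabaseStack extends cdk.Stack {
      constructor(scope: Construct, id: string) {
        super(scope, id);

        // S3 bucket that stores the dataset
        const bucket = new Bucket(this, 'DataCatalogBucket');

        // Glue database whose default location is the bucket prefix
        new dsf.governance.DataCatalogDatabase(this, 'DataCatalogDatabase', {
          locationBucket: bucket,
          locationPrefix: '/databasePath',
          name: 'example-db',
        });
      }
    }

Python:

    import aws_cdk as cdk
    import cdklabs.aws_data_solutions_framework as dsf
    from aws_cdk.aws_s3 import Bucket

    class ExampleDefaultDataCatalogDatabaseStack(cdk.Stack):
        def __init__(self, scope, id):
            super().__init__(scope, id)

            # S3 bucket that stores the dataset
            bucket = Bucket(self, "DataCatalogBucket")

            # Glue database whose default location is the bucket prefix
            dsf.governance.DataCatalogDatabase(self, "DataCatalogDatabase",
                location_bucket=bucket,
                location_prefix="/databasePath",
                name="example-db"
            )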

Using Lake Formation permission model

You can change the default permission model of the database to use Lake Formation exclusively or hybrid mode.

Changing the permission model to Lake Formation or Hybrid has the following impact:

- The CDK provisioning role is added as a Lake Formation administrator so it can perform Lake Formation operations

- The IAMAllowedPrincipals grant is removed from the database to enforce Lake Formation as the sole permission model (only for the Lake Formation permission model)

Lake Formation and Hybrid permission models are configured using the PutDataLakeSettings API call. Concurrent API calls can lead to throttling. If you create multiple DataCatalogDatabase constructs, it is recommended to create dependencies between the dataLakeSettings resources exposed by each database to avoid concurrent calls. See the example in the DataLakeCatalog construct documentation.

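TypeScript:

    import * as cdk from 'aws-cdk-lib';
    import { Bucket } from 'aws-cdk-lib/aws-s3';
    import { Construct } from 'constructs';
    import * as dsf from '@cdklabs/aws-data-solutions-framework';

    class ExampleDefaultDataCatalogDatabaseStack extends cdk.Stack {
      constructor(scope: Construct, id: string) {
        super(scope, id);

        const bucket = new Bucket(this, 'DataCatalogBucket');

        new dsf.governance.DataCatalogDatabase(this, 'DataCatalogDatabase', {
          locationBucket: bucket,
          locationPrefix: '/databasePath',
          name: 'example-db',
          // Use Lake Formation as the only permission model
          permissionModel: dsf.utils.PermissionModel.LAKE_FORMATION,
        });
      }
    }

Python:

    import aws_cdk as cdk
    import cdklabs.aws_data_solutions_framework as dsf
    from aws_cdk.aws_s3 import Bucket

    class ExampleDefaultDataCatalogDatabaseStack(cdk.Stack):
        def __init__(self, scope, id):
            super().__init__(scope, id)

            bucket = Bucket(self, "DataCatalogBucket")

            dsf.governance.DataCatalogDatabase(self, "DataCatalogDatabase",
                location_bucket=bucket,
                location_prefix="/databasePath",
                name="example-db",
                # Use Lake Formation as the only permission model
                permission_model=dsf.utils.PermissionModel.LAKE_FORMATION
            )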
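When several databases use the Lake Formation or Hybrid permission model, the recommendation to create dependencies between their data lake settings can be sketched as a simple chain, so that each settings resource is deployed after the previous one. The helper `chain_dependencies` below is hypothetical and not part of DSF. It assumes each object exposes the standard CDK `node.add_dependency()` method, and that the Python attribute holding the settings is named `data_lake_settings` (the snake_case form of dataLakeSettings), which may be unset for databases using the IAM model.

```python
from typing import Any, Iterable, List, Optional


def chain_dependencies(resources: Iterable[Optional[Any]]) -> List[Any]:
    """Make each resource depend on the previous one, in the order given.

    Hypothetical helper: entries that are None (for example, a database
    with no data lake settings) are skipped. Returns the chained resources.
    """
    present = [resource for resource in resources if resource is not None]
    # Pair each resource with its successor: (first, second), (second, third), ...
    for earlier, later in zip(present, present[1:]):
        later.node.add_dependency(earlier)
    return present


# Possible usage inside a stack, assuming db1, db2 and db3 are
# DataCatalogDatabase constructs created earlier (names are placeholders):
#
#   chain_dependencies([db.data_lake_settings for db in (db1, db2, db3)])
```

Chaining the resources serializes the PutDataLakeSettings calls at deployment time, at the cost of a slightly longer deployment.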

Modifying the crawler behavior

You can change the default configuration of the AWS Glue Crawler to match your requirements:

- Enable or disable the crawler

- Change the crawler run frequency

- Provide your own key to encrypt the crawler logs

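TypeScript:

    import { Key } from 'aws-cdk-lib/aws-kms';

    // Assumes `bucket` is an existing Bucket, as in the examples above
    const encryptionKey = new Key(this, 'CrawlerLogEncryptionKey');

    new dsf.governance.DataCatalogDatabase(this, 'DataCatalogDatabase', {
      locationBucket: bucket,
      locationPrefix: '/databasePath',
      name: 'example-db',
      // Enable the crawler and run it every day at 00:01
      autoCrawl: true,
      autoCrawlSchedule: {
        scheduleExpression: 'cron(1 0 * * ? *)',
      },
      // Encrypt the crawler logs with your own key
      crawlerLogEncryptionKey: encryptionKey,
      crawlerTableLevelDepth: 3,
    });

Python:

    from aws_cdk.aws_kms import Key

    # Assumes `bucket` is an existing Bucket, as in the examples above
    encryption_key = Key(self, "CrawlerLogEncryptionKey")

    dsf.governance.DataCatalogDatabase(self, "DataCatalogDatabase",
        location_bucket=bucket,
        location_prefix="/databasePath",
        name="example-db",
        # Enable the crawler and run it every day at 00:01
        auto_crawl=True,
        auto_crawl_schedule=cdk.aws_glue.CfnCrawler.ScheduleProperty(
            schedule_expression="cron(1 0 * * ? *)"
        ),
        # Encrypt the crawler logs with your own key
        crawler_log_encryption_key=encryption_key,
        crawler_table_level_depth=3
    )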
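The schedule expression used in the example above follows the six-field AWS cron format: minutes, hours, day of month, month, day of week, year. As a minimal sketch, the hypothetical helpers below (not part of DSF or the AWS CDK) build a daily expression and split an existing expression into named fields, which can help when reviewing a custom schedule. They only check the overall shape of the expression, not every rule of the cron syntax.

```python
import re
from typing import Dict

# Field order of an AWS cron expression: cron(Minutes Hours Day-of-month Month Day-of-week Year)
CRON_FIELDS = ("minutes", "hours", "day_of_month", "month", "day_of_week", "year")


def daily_schedule(hour: int, minute: int) -> str:
    """Return a cron expression that fires once a day at hour:minute."""
    if not 0 <= hour <= 23:
        raise ValueError(f"hour must be between 0 and 23, got {hour}")
    if not 0 <= minute <= 59:
        raise ValueError(f"minute must be between 0 and 59, got {minute}")
    # '?' in day-of-week means "no specific value", since day-of-month is '*'
    return f"cron({minute} {hour} * * ? *)"


def parse_schedule_expression(expression: str) -> Dict[str, str]:
    """Split a 'cron(...)' expression into its six named fields."""
    match = re.fullmatch(r"cron\((.+)\)", expression.strip())
    if match is None:
        raise ValueError(f"not a cron(...) expression: {expression!r}")
    values = match.group(1).split()
    if len(values) != len(CRON_FIELDS):
        raise ValueError(f"expected {len(CRON_FIELDS)} fields, got {len(values)}")
    return dict(zip(CRON_FIELDS, values))


# The default schedule described in the overview: every day at 00:01
print(daily_schedule(0, 1))
print(parse_schedule_expression("cron(1 0 * * ? *)"))
```

For example, `daily_schedule(6, 30)` returns `cron(30 6 * * ? *)`, which could be passed as the schedule expression of the crawler.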