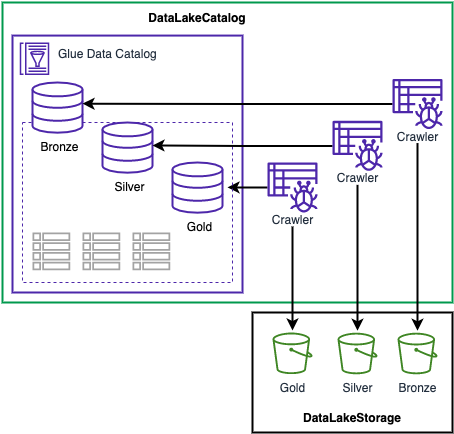

DataLakeCatalog

AWS Glue Catalog databases on top of a DataLakeStorage.

Overview

DataLakeCatalog is a data catalog for your data lake: a set of AWS Glue Data Catalog databases configured on top of a DataLakeStorage.

The construct creates three databases, one for each medallion layer (bronze, silver, and gold) of the DataLakeStorage:

- The default location of each database points to the corresponding S3 bucket location: `s3://<locationBucket>/<locationPrefix>/`.

- By default, each database has an active crawler scheduled to run once a day (00:01h local timezone). The crawler can be disabled, and its schedule/frequency can be modified with a cron expression.
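The daily 00:01 default corresponds to the expression `cron(1 0 * * ? *)` used in the crawler examples on this page. AWS cron expressions have six space-separated fields (minutes, hours, day-of-month, month, day-of-week, year), and either day-of-month or day-of-week must be `?`. The sketch below splits such an expression into named fields and builds a new daily expression. The helpers `parseAwsCron` and `dailyCron` are hypothetical and not part of DSF; they only check the field count and hour/minute ranges, not the full cron grammar.

```typescript
// Field names of the six-field AWS cron syntax, in order.
const CRON_FIELDS = ['minutes', 'hours', 'dayOfMonth', 'month', 'dayOfWeek', 'year'] as const;

type CronField = (typeof CRON_FIELDS)[number];
type CronSchedule = Record<CronField, string>;

// Hypothetical helper: splits "cron(1 0 * * ? *)" into named fields.
function parseAwsCron(expression: string): CronSchedule {
  const match = /^cron\((.+)\)$/.exec(expression.trim());
  if (!match) {
    throw new Error(`Not an AWS cron expression: ${expression}`);
  }
  const parts = match[1].trim().split(/\s+/);
  if (parts.length !== CRON_FIELDS.length) {
    throw new Error(`Expected ${CRON_FIELDS.length} fields, got ${parts.length}`);
  }
  const schedule = {} as CronSchedule;
  CRON_FIELDS.forEach((field, i) => {
    schedule[field] = parts[i];
  });
  return schedule;
}

// Hypothetical helper: builds an expression that runs every day at hour:minute.
// Day-of-week is "?" because day-of-month is already "*".
function dailyCron(hour: number, minute: number): string {
  if (hour < 0 || hour > 23 || minute < 0 || minute > 59) {
    throw new RangeError(`Invalid time ${hour}:${minute}`);
  }
  return `cron(${minute} ${hour} * * ? *)`;
}
```

For example, `parseAwsCron('cron(1 0 * * ? *)')` yields `minutes: '1'` and `hours: '0'`, and `dailyCron(6, 30)` returns `cron(30 6 * * ? *)`.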

The AWS Glue Data Catalog resources created by the DataCatalogDatabase construct are not encrypted, because Data Catalog encryption can only be configured at the catalog level, not per database. Changing the catalog-level encryption has a wide impact on existing Glue resources and on their producers and consumers. For the same reason, changing the catalog-level encryption configuration after this construct is deployed can break all the resources created as part of DSF on AWS.

Usage

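TypeScript:

```typescript
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import * as dsf from '@cdklabs/aws-data-solutions-framework';

class ExampleDefaultDataLakeCatalogStack extends cdk.Stack {
  constructor(scope: Construct, id: string) {
    super(scope, id);

    // Bronze, silver and gold buckets of the data lake
    const storage = new dsf.storage.DataLakeStorage(this, 'MyDataLakeStorage');

    // One Glue Data Catalog database per medallion layer, with the default daily crawlers
    new dsf.governance.DataLakeCatalog(this, 'DataCatalog', {
      dataLakeStorage: storage,
    });
  }
}
```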

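Python:

```python
import aws_cdk as cdk
from constructs import Construct
import cdklabs.aws_data_solutions_framework as dsf


class ExampleDefaultDataLakeCatalogStack(cdk.Stack):
    def __init__(self, scope: Construct, id: str):
        super().__init__(scope, id)

        # Bronze, silver and gold buckets of the data lake
        storage = dsf.storage.DataLakeStorage(self, "MyDataLakeStorage")

        # One Glue Data Catalog database per medallion layer, with the default daily crawlers
        dsf.governance.DataLakeCatalog(self, "DataCatalog",
            data_lake_storage=storage
        )
```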
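In the default setup above, each of the three databases gets a location of the form `s3://<locationBucket>/<locationPrefix>/`. As a minimal illustration of that pattern only, the sketch below builds such a URI. The `LayerLocation` interface, the `databaseLocationUri` function, and the bucket and prefix values are hypothetical; in practice the bucket names come from the DataLakeStorage and the actual values are set by the constructs.

```typescript
// Hypothetical inputs for one medallion layer's database location (not the DSF API).
interface LayerLocation {
  locationBucket: string;
  locationPrefix: string;
}

// Builds "s3://<locationBucket>/<locationPrefix>/", trimming leading and trailing
// slashes from the prefix so no slash is duplicated around it.
function databaseLocationUri(location: LayerLocation): string {
  const prefix = location.locationPrefix.replace(/^\/+|\/+$/g, '');
  return prefix.length > 0
    ? `s3://${location.locationBucket}/${prefix}/`
    : `s3://${location.locationBucket}/`;
}
```

For instance, `databaseLocationUri({ locationBucket: 'example-silver-bucket', locationPrefix: '/sales/' })` returns `s3://example-silver-bucket/sales/`.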

Modifying the crawlers' behavior for the entire catalog

You can change the default configuration of the AWS Glue crawlers associated with the three databases to match your requirements:

- Enable or disable the crawlers

- Change the crawlers' run frequency

- Provide your own KMS key to encrypt the crawlers' logs

These parameters apply to all three databases. If you need fine-grained configuration per database, use the DataCatalogDatabase construct instead.
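Because DataLakeCatalog applies one set of crawler parameters to all three databases, a per-layer difference (for example, disabling the crawler only on the gold database) means configuring each DataCatalogDatabase separately. The plain TypeScript sketch below models that difference only. `CrawlerOptions`, `applyCatalogWide`, and `applyPerDatabase` are hypothetical names that do not exist in DSF and create no AWS resources.

```typescript
// Hypothetical model of two of the crawler options listed above (not the DSF API).
interface CrawlerOptions {
  autoCrawl: boolean;
  scheduleExpression: string;
}

type Layer = 'bronze' | 'silver' | 'gold';
const LAYERS: Layer[] = ['bronze', 'silver', 'gold'];

// Catalog-wide: every layer's database receives a copy of the same options.
function applyCatalogWide(options: CrawlerOptions): Record<Layer, CrawlerOptions> {
  const result = {} as Record<Layer, CrawlerOptions>;
  for (const layer of LAYERS) {
    result[layer] = { ...options };
  }
  return result;
}

// Per-database: start from shared defaults and override selected layers only,
// which is what configuring each database individually amounts to.
function applyPerDatabase(
  defaults: CrawlerOptions,
  overrides: Partial<Record<Layer, Partial<CrawlerOptions>>>,
): Record<Layer, CrawlerOptions> {
  const result = {} as Record<Layer, CrawlerOptions>;
  for (const layer of LAYERS) {
    const override: Partial<CrawlerOptions> = overrides[layer] || {};
    result[layer] = { ...defaults, ...override };
  }
  return result;
}
```

Calling `applyPerDatabase({ autoCrawl: true, scheduleExpression: 'cron(1 0 * * ? *)' }, { gold: { autoCrawl: false } })` keeps the daily crawler on bronze and silver and disables it on gold, something a single catalog-wide setting cannot express.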

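TypeScript:

```typescript
import { Key } from 'aws-cdk-lib/aws-kms';

// Runs inside a cdk.Stack constructor; `storage` is the DataLakeStorage from the previous example.
// Customer-managed KMS key that encrypts the crawlers' logs
const encryptionKey = new Key(this, 'CrawlerLogEncryptionKey');

new dsf.governance.DataLakeCatalog(this, 'DataCatalog', {
  dataLakeStorage: storage,
  // Keep the crawlers enabled (set to false to disable them)
  autoCrawl: true,
  // AWS cron syntax (minutes hours day-of-month month day-of-week year): daily at 00:01
  autoCrawlSchedule: {
    scheduleExpression: 'cron(1 0 * * ? *)',
  },
  crawlerLogEncryptionKey: encryptionKey,
  crawlerTableLevelDepth: 3,
});
```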

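Python:

```python
from aws_cdk.aws_kms import Key

# Runs inside a Stack's __init__; `storage` is the DataLakeStorage from the previous example.
# Customer-managed KMS key that encrypts the crawlers' logs
encryption_key = Key(self, "CrawlerLogEncryptionKey")

dsf.governance.DataLakeCatalog(self, "DataCatalog",
    data_lake_storage=storage,
    # Keep the crawlers enabled (set to False to disable them)
    auto_crawl=True,
    # AWS cron syntax (minutes hours day-of-month month day-of-week year): daily at 00:01
    auto_crawl_schedule=cdk.aws_glue.CfnCrawler.ScheduleProperty(
        schedule_expression="cron(1 0 * * ? *)"
    ),
    crawler_log_encryption_key=encryption_key,
    crawler_table_level_depth=3
)
```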
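The examples above set `crawlerTableLevelDepth` to 3. Assuming this value maps to the AWS Glue crawler table level setting (`TableLevelConfiguration`), where the level is the absolute position in the S3 path and the bucket counts as level 1, the sketch below shows which prefix a crawler would treat as the table root for a given object. The `tableRootAtLevel` function is hypothetical and only illustrates the path arithmetic under that assumption.

```typescript
// Hypothetical helper: returns the prefix treated as a table root when tables are
// created at `level`, assuming the bucket is level 1 (as in Glue's TableLevelConfiguration).
function tableRootAtLevel(s3Uri: string, level: number): string {
  const match = /^s3:\/\/([^\/]+)\/?(.*)$/.exec(s3Uri);
  if (!match) {
    throw new Error(`Not an S3 URI: ${s3Uri}`);
  }
  const bucket = match[1];
  // Path segments after the bucket, ignoring empty segments from repeated or trailing slashes
  const keySegments = match[2].split('/').filter((segment) => segment.length > 0);
  const segments = [bucket].concat(keySegments);
  if (level < 1 || level > segments.length) {
    throw new RangeError(`Level ${level} is outside 1..${segments.length} for ${s3Uri}`);
  }
  return `s3://${segments.slice(0, level).join('/')}/`;
}
```

Under the same assumption, with a depth of 3 an object at `s3://my-bucket/sales/orders/year=2024/part-0000.parquet` would be grouped under a table rooted at `s3://my-bucket/sales/orders/`.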