SSH Into Your HyperPod Cluster

Overview

SageMaker HyperPod clusters don't expose SSH ports directly to the internet for security reasons. Instead, you access cluster nodes through AWS Systems Manager (SSM) Session Manager, which provides secure, auditable access without requiring inbound firewall rules or bastion hosts.

This guide covers:

- Why SSM is required and how it works

- Setting up SSM with proper permissions

- Manual SSH configuration

- Using the easy-ssh.sh script to automate the process

Why AWS Systems Manager (SSM)?

AWS Systems Manager Session Manager provides several security and operational benefits:

- No inbound ports: Eliminates the need to open SSH ports (22) in security groups

- No bastion hosts: Removes the complexity and cost of managing jump servers

- Centralized access control: Uses IAM policies for fine-grained access control

- Audit logging: All session activity can be logged to CloudWatch and S3

- Encrypted connections: All traffic is encrypted in transit

- No key management: Can work without distributing SSH keys (though keys enable additional features)

Prerequisites

Before connecting to your HyperPod cluster, ensure:

- Your cluster status is InService

- You have AWS CLI configured with appropriate credentials

- You have the required IAM permissions (see IAM Permissions section)

To configure AWS CLI credentials, refer to Authentication and access credentials for the AWS CLI.
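The InService prerequisite can be checked from the command line. The following is a minimal sketch, assuming the AWS CLI is configured; the function name cluster_in_service is hypothetical, and ClusterStatus is the status field in the describe-cluster response.

```shell
# Hypothetical helper: succeeds only when the cluster reports InService.
# ClusterStatus is the status field returned by `aws sagemaker describe-cluster`.
cluster_in_service() {
    cluster_status=$(aws sagemaker describe-cluster \
        --cluster-name "$1" \
        --query 'ClusterStatus' \
        --output text) || return 1
    echo "Cluster $1 status: $cluster_status"
    [ "$cluster_status" = "InService" ]
}
```

For example, `cluster_in_service <your-cluster-name> && echo "ready to connect"` prints the confirmation only when the cluster is ready.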

Step 1: Install SSM Session Manager Plugin

The Session Manager plugin enables the AWS CLI to start and end sessions that connect you to your managed instances.

Run the commands for your platform.

macOS (arm64):

curl "https://s3.amazonaws.com/session-manager-downloads/plugin/latest/mac_arm64/sessionmanager-bundle.zip" -o "sessionmanager-bundle.zip"

unzip sessionmanager-bundle.zip

sudo ./sessionmanager-bundle/install -i /usr/local/sessionmanagerplugin -b /usr/local/bin/session-manager-plugin

macOS (x86_64):

curl "https://s3.amazonaws.com/session-manager-downloads/plugin/latest/mac/sessionmanager-bundle.zip" -o "sessionmanager-bundle.zip"

unzip sessionmanager-bundle.zip

sudo ./sessionmanager-bundle/install -i /usr/local/sessionmanagerplugin -b /usr/local/bin/session-manager-plugin

Amazon Linux 2:

sudo yum install -y https://s3.amazonaws.com/session-manager-downloads/plugin/latest/linux_64bit/session-manager-plugin.rpm

Ubuntu (SageMaker CodeEditor):

sudo curl "https://s3.amazonaws.com/session-manager-downloads/plugin/latest/ubuntu_64bit/session-manager-plugin.deb" -o "/tmp/session-manager-plugin.deb"

sudo dpkg -i /tmp/session-manager-plugin.deb

Verify the installation:

session-manager-plugin --version

Step 2: Gather Cluster Information

You'll need three pieces of information to connect to your HyperPod cluster:

| Key | Example Value | How to obtain |

|---|---|---|

| Cluster ID | q2vei6nzqldz | Extract from cluster ARN in describe-cluster |

| Instance ID | i-08982ccd4b6b34eb1 | From list-cluster-nodes output |

| Node Group Name | controller-machine | From list-cluster-nodes output |

Get Cluster ID

aws sagemaker describe-cluster --cluster-name <your-cluster-name> --query 'ClusterArn' --output text

The cluster ID is the last part of the ARN after the final /.
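Extracting the final ARN segment can be done with shell parameter expansion. This is a minimal sketch; the ARN below is a made-up sample (the account ID and region are placeholders), and in practice cluster_arn would hold the output of the describe-cluster command above.

```shell
# Sample ARN for illustration only; the account ID and region are made up.
cluster_arn="arn:aws:sagemaker:us-west-2:123456789012:cluster/q2vei6nzqldz"

# Strip the longest prefix ending in "/" to keep only the final ARN segment.
cluster_id="${cluster_arn##*/}"

echo "$cluster_id"
# prints: q2vei6nzqldz
```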

Get Instance ID and Node Group Name

aws sagemaker list-cluster-nodes --cluster-name <your-cluster-name>
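The full JSON response can be long for large clusters. As a sketch, the --query option can narrow it to the two values needed here. The function name list_hyperpod_nodes is hypothetical, and the query assumes the response lists nodes under ClusterNodeSummaries with InstanceGroupName (the node group name) and InstanceId fields.

```shell
# Hypothetical helper: prints one "<node-group> <instance-id>" pair per line.
# Assumes the response lists nodes under ClusterNodeSummaries with
# InstanceGroupName and InstanceId fields.
list_hyperpod_nodes() {
    aws sagemaker list-cluster-nodes \
        --cluster-name "$1" \
        --query 'ClusterNodeSummaries[].[InstanceGroupName, InstanceId]' \
        --output text
}
```

Calling `list_hyperpod_nodes <your-cluster-name>` prints tab-separated rows, one per node.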

Step 3: Manual SSH Configuration

This section shows how to manually configure SSH access, giving you full control over the setup process.

3.1 Generate SSH Key Pair (if needed)

ssh-keygen -t rsa -b 4096 -f "$HOME/.ssh/id_rsa" -N ""

3.2 Connect via SSM and Add Your Public Key

First, connect to your cluster using the raw SSM command:

aws ssm start-session \

--target sagemaker-cluster:<cluster-id>_<node-group>-<instance-id> \

--region <your-region>
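The target string is easy to mistype because it mixes a colon, an underscore, and a hyphen as separators. A small sketch of how it is assembled from the three values gathered in Step 2 (the function name hyperpod_target is hypothetical, and the values are the examples from the table above):

```shell
# Hypothetical helper: assembles the SSM target from the cluster ID,
# node group name, and instance ID, in that order.
hyperpod_target() {
    printf 'sagemaker-cluster:%s_%s-%s\n' "$1" "$2" "$3"
}

hyperpod_target q2vei6nzqldz controller-machine i-08982ccd4b6b34eb1
# prints: sagemaker-cluster:q2vei6nzqldz_controller-machine-i-08982ccd4b6b34eb1
```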

Once connected, you'll be logged in as root. Switch to the ubuntu user and add your public key:

# Switch to ubuntu user

sudo su - ubuntu

# Create .ssh directory if it doesn't exist

mkdir -p ~/.ssh

chmod 700 ~/.ssh

# Add your public key (replace with your actual public key)

echo "ssh-rsa AAAAB3NzaC1yc2EAAAA... your-public-key" >> ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

# Exit the session

exit

exit
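Running the echo command above more than once appends the same key each time. One way to avoid duplicates is to append only when the exact line is missing. This is a sketch, not part of the original procedure; the function name add_authorized_key and its optional second argument are hypothetical.

```shell
# Hypothetical helper: append a public key to an authorized_keys file only if
# that exact line is not already present.
# Arguments: <public-key-line> [authorized-keys-path]
add_authorized_key() {
    key="$1"
    keys_file="${2:-$HOME/.ssh/authorized_keys}"
    mkdir -p "$(dirname "$keys_file")"
    touch "$keys_file"
    # -F: fixed string, -x: match the whole line, -q: no output
    if ! grep -qxF -- "$key" "$keys_file"; then
        printf '%s\n' "$key" >> "$keys_file"
    fi
    chmod 600 "$keys_file"
}
```

On the cluster node, as the ubuntu user, this would be called with the full public key line as the first argument.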

3.3 Configure SSH Client

Add the following configuration to your local ~/.ssh/config file:

cat <<EOF >> ~/.ssh/config

Host <your-cluster-name>

User ubuntu

ProxyCommand sh -c "aws ssm start-session --target sagemaker-cluster:<cluster-id>_<node-group>-<instance-id> --document-name AWS-StartSSHSession --parameters 'portNumber=%p'"

EOF

Replace the placeholders with your actual values:

- <your-cluster-name>: A friendly name for your cluster

- <cluster-id>: Your cluster ID

- <node-group>: Your node group name

- <instance-id>: Your instance ID
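To avoid editing placeholders by hand, the entry can be generated from the four values. The sketch below prints a Host entry in the format shown above; the function name ssh_config_entry is hypothetical, and the indentation inside the entry is cosmetic.

```shell
# Hypothetical helper: prints a Host entry for ~/.ssh/config.
# Arguments: <host-alias> <cluster-id> <node-group> <instance-id>
ssh_config_entry() {
    cat <<EOF
Host ${1}
    User ubuntu
    ProxyCommand sh -c "aws ssm start-session --target sagemaker-cluster:${2}_${3}-${4} --document-name AWS-StartSSHSession --parameters 'portNumber=%p'"
EOF
}
```

For example, `ssh_config_entry ml-cluster q2vei6nzqldz controller-machine i-08982ccd4b6b34eb1 >> ~/.ssh/config` appends the entry. Reviewing the output before appending is a reasonable precaution.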

3.4 Test SSH Connection

Now you can connect using standard SSH:

ssh <your-cluster-name>

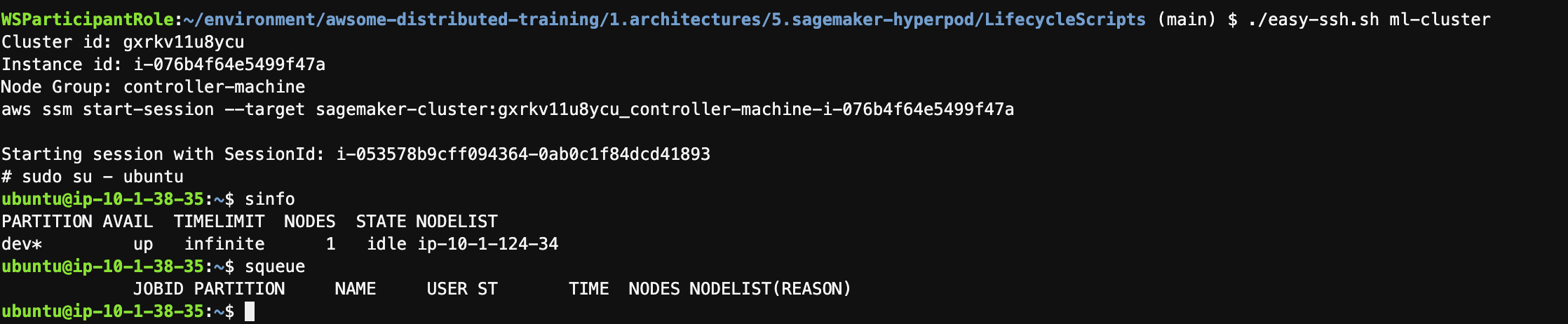

Step 4: Using the easy-ssh.sh Script (Automation Tool)

The easy-ssh.sh script automates the manual process described above. It's a convenience tool that:

- Automatically retrieves cluster information

- Sets up SSH configuration

- Adds your public key to the cluster

Download and Use the Script

# Download the script

curl -O https://raw.githubusercontent.com/aws-samples/awsome-distributed-training/main/1.architectures/5.sagemaker-hyperpod/easy-ssh.sh

chmod +x easy-ssh.sh

# Generate SSH key if you don't have one

ssh-keygen -t rsa -b 4096 -f "$HOME/.ssh/id_rsa" -N ""

# Run the script

./easy-ssh.sh -c <node-group> <cluster-name>

Example:

./easy-ssh.sh -c controller-machine ml-cluster

The script will prompt you to:

- Add the cluster to your ~/.ssh/config

- Add your SSH public key to the cluster

Answer "yes" to both prompts for the full automated setup.

IAM Permissions

To access HyperPod clusters via SSM, users need specific IAM permissions. Here's a least-privilege policy example:

Minimum Required Permissions

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ssm:StartSession"

],

"Resource": [

"arn:aws:ssm:*:*:document/AWS-StartSSHSession",

"arn:aws:ssm:*:*:document/SSM-SessionManagerRunShell"

]

},

{

"Effect": "Allow",

"Action": [

"ssm:StartSession"

],

"Resource": "arn:aws:ssm:*:*:managed-instance/sagemaker-cluster:*",

"Condition": {

"StringLike": {

"ssm:resourceTag/sagemaker:cluster-name": "<your-cluster-name>"

}

}

},

{

"Effect": "Allow",

"Action": [

"ssm:TerminateSession",

"ssm:ResumeSession"

],

"Resource": "arn:aws:ssm:*:*:session/${aws:username}-*"

},

{

"Effect": "Allow",

"Action": [

"sagemaker:DescribeCluster",

"sagemaker:ListClusterNodes"

],

"Resource": "arn:aws:sagemaker:*:*:cluster/<your-cluster-name>"

}

]

}

Additional Permissions for Multi-User Environments

If you're setting up access for multiple users, consider these additional permissions:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ssm:SendCommand"

],

"Resource": [

"arn:aws:ssm:*:*:managed-instance/sagemaker-cluster:*",

"arn:aws:ssm:*:*:document/AWS-RunShellScript"

],

"Condition": {

"StringEquals": {

"ssm:resourceTag/sagemaker:cluster-name": "<your-cluster-name>"

}

}

}

]

}

When granting these permissions, follow these security practices:

- Principle of least privilege: Only grant the minimum permissions required

- Resource-specific access: Use conditions to limit access to specific clusters

- Regular audits: Review and rotate access permissions regularly

- Session logging: Enable CloudWatch logging for all SSM sessions

- User isolation: Consider using separate IAM roles for different user groups

Troubleshooting

Common Issues

Cluster not accessible:

An error occurred (TargetNotConnected) when calling the StartSession operation

- Verify cluster status is InService: aws sagemaker describe-cluster --cluster-name <cluster-name>

- Check your IAM permissions

- Ensure you're using the correct region

RunAs user errors:

Unable to start command: failed to start pty since RunAs user <username> does not exist

- This occurs when SSM Run-as-user is configured but the user doesn't exist on the target

- Disable Run-as-user in SSM preferences or create the user on the target instance

SSH connection refused:

ssh: connect to host <cluster-name> port 22: Connection refused

- Verify your SSH configuration in ~/.ssh/config

- Ensure the ProxyCommand is correctly formatted

- Test the underlying SSM connection first

Expected Output

When the script runs successfully, you'll see output similar to:

=================================================

==== 🚀 HyperPod Cluster Easy SSH Script! 🚀 ====

=================================================

Cluster id: hyiyx2wvois6

Instance id: i-0744d68e03703e483

Node Group: controller-machine

Would you like to add ml-cluster to ~/.ssh/config (yes/no)?

> yes

✅ adding ml-cluster to ~/.ssh/config:

2. Do you want to add your SSH public key ~/.ssh/id_rsa.pub to the cluster (yes/no)?

> yes

Adding ... ssh-rsa ...

✅ Your SSH public key ~/.ssh/id_rsa.pub has been added to the cluster.

Now you can run:

$ ssh ml-cluster

Connecting to Your Cluster

After completing either the manual setup or using the easy-ssh.sh script, you can connect to your cluster:

ssh <your-cluster-name>

You'll be connected as the ubuntu user and can start running your workloads.

Advanced Configuration

Port Forwarding

You can forward ports through the SSM tunnel for accessing services running on your cluster:

ssh -L 8080:localhost:8080 <your-cluster-name>

SCP File Transfer

Copy files to/from your cluster using SCP:

# Copy file to cluster

scp local-file.txt <your-cluster-name>:~/

# Copy file from cluster

scp <your-cluster-name>:~/remote-file.txt ./

Multiple Node Access

To access different nodes in your cluster, you can create separate SSH configurations for each node by following the manual setup process with different instance IDs.
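As a sketch of what that could look like, the function below reads "<node-group> <instance-id>" pairs on standard input and prints one Host entry per node. The function name multi_node_ssh_config and the alias scheme <cluster-name>-<n> are illustrative assumptions, and the sample instance IDs in the usage line are made up.

```shell
# Hypothetical helper: prints one Host entry per node read from stdin.
# Arguments: <cluster-name> <cluster-id>
# Input lines: "<node-group> <instance-id>" (space or tab separated)
multi_node_ssh_config() {
    cluster_name="$1"
    cluster_id="$2"
    n=0
    while read -r node_group instance_id; do
        [ -n "$instance_id" ] || continue   # skip blank or malformed lines
        n=$((n + 1))
        cat <<EOF
Host ${cluster_name}-${n}
    User ubuntu
    ProxyCommand sh -c "aws ssm start-session --target sagemaker-cluster:${cluster_id}_${node_group}-${instance_id} --document-name AWS-StartSSHSession --parameters 'portNumber=%p'"
EOF
    done
}

# Usage with made-up sample nodes:
printf 'worker-group-1 i-0aaa\nworker-group-1 i-0bbb\n' | multi_node_ssh_config ml-cluster q2vei6nzqldz
```

Each node still needs your public key in its authorized_keys file (step 3.2) before `ssh ml-cluster-1` will succeed.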