Mistral

Unstable API

0.8.0

@project-lakechain/bedrock-text-processors

The Mistral text processor allows you to leverage the Mistral and Mixtral large-language models provided by Mistral AI on Amazon Bedrock within your pipelines. Using this construct, you can use prompt engineering techniques to transform text documents, including, text summarization, text translation, information extraction, and more!

📝 Text Generation

To start using Mistral models in your pipelines, you import the MistralTextProcessor construct in your CDK stack, and specify the specific text model you want to use.

💁 The below example demonstrates how to use the Mistral text processor to summarize input documents uploaded to an S3 bucket.

import { S3EventTrigger } from '@project-lakechain/s3-event-trigger';import { MistralTextProcessor, MistralTextModel } from '@project-lakechain/bedrock-text-processors';import { CacheStorage } from '@project-lakechain/core';

class Stack extends cdk.Stack { constructor(scope: cdk.Construct, id: string) { const cache = new CacheStorage(this, 'Cache');

// Create the S3 event trigger. const trigger = new S3EventTrigger.Builder() .withScope(this) .withIdentifier('Trigger') .withCacheStorage(cache) .withBucket(bucket) .build();

// Transforms input documents using a Mistral model. const mistral = new MistralTextProcessor.Builder() .withScope(this) .withIdentifier('MistralTextProcessor') .withCacheStorage(cache) .withSource(source) .withModel(MistralTextModel.MIXTRAL_8x7B_INSTRUCT) .withPrompt(` Give a detailed summary of the text with the following constraints: - Write the summary in the same language as the original text. - Keep the original meaning, style, and tone of the text in the summary. `) .withModelParameters({ temperature: 0.3, max_tokens: 4096 }) .build(); }}🤖 Model Selection

You can select the specific Mistral model to use with this middleware using the .withModel API.

import { MistralTextProcessor, MistralTextModel } from '@project-lakechain/bedrock-text-processors';

const mistral = new MistralTextProcessor.Builder() .withScope(this) .withIdentifier('MistralTextProcessor') .withCacheStorage(cache) .withSource(source) .withModel(MistralTextModel.MISTRAL_LARGE) // 👈 Specify a model .withPrompt(prompt) .build();💁 You can choose amongst the following models — see the Bedrock documentation for more information.

| Model Name | Model identifier |

|---|---|

| MISTRAL_7B_INSTRUCT | mistral.mistral-7b-instruct-v0:2 |

| MIXTRAL_8x7B_INSTRUCT | mistral.mixtral-8x7b-instruct-v0:1 |

| MISTRAL_LARGE | mistral.mistral-large-2402-v1:0 |

| MISTRAL_LARGE_2 | mistral.mistral-large-2407-v1:0 |

| MISTRAL_SMALL | mistral.mistral-small-2402-v1:0 |

🌐 Region Selection

You can specify the AWS region in which you want to invoke Amazon Bedrock using the .withRegion API. This can be helpful if Amazon Bedrock is not yet available in your deployment region.

💁 By default, the middleware will use the current region in which it is deployed.

import { MistralTextProcessor, MistralTextModel } from '@project-lakechain/bedrock-text-processors';

const mistral = new MistralTextProcessor.Builder() .withScope(this) .withIdentifier('MistralTextProcessor') .withCacheStorage(cache) .withSource(source) .withRegion('eu-central-1') // 👈 Alternate region .withModel(MistralTextModel.MISTRAL_LARGE) .withPrompt(prompt) .build();⚙️ Model Parameters

You can optionally forward specific parameters to the underlying LLM using the .withModelParameters method. Below is a description of the supported parameters.

💁 See the Bedrock Inference Parameters for more information on the parameters supported by the different models.

| Parameter | Description | Min | Max | Default |

|---|---|---|---|---|

| temperature | Controls the randomness of the generated text. | 0 | 1 | Model dependant |

| maxTokens | The maximum number of tokens to generate. | 1 | Model dependant | N/A |

| topP | The cumulative probability of the top tokens to sample from. | 0 | 1 | Model dependant |

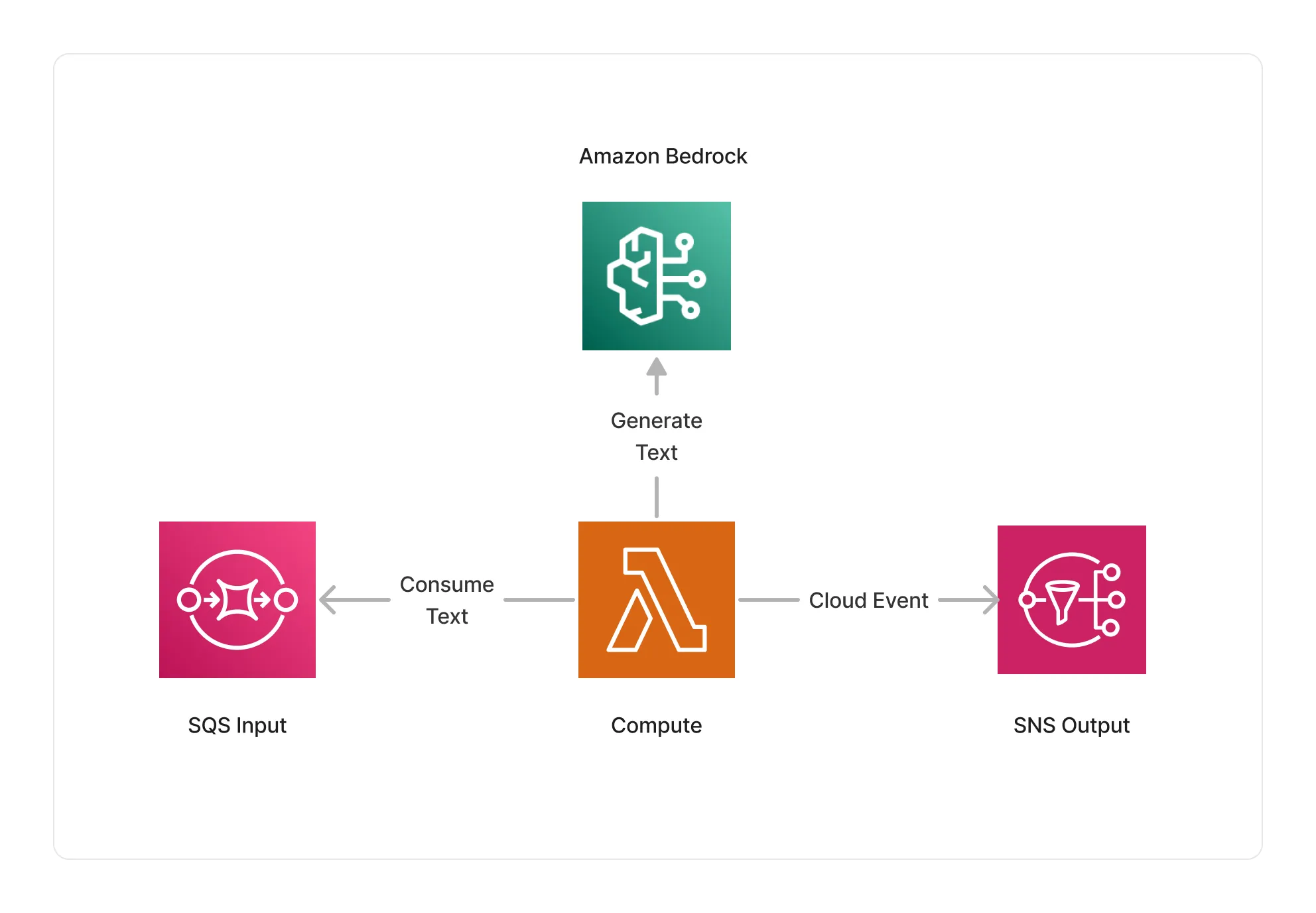

🏗️ Architecture

This middleware is based on a Lambda compute running on an ARM64 architecture, and integrate with Amazon Bedrock to generate text based on the given prompt and input documents.

🏷️ Properties

Supported Inputs

| Mime Type | Description |

|---|---|

text/plain | UTF-8 text documents. |

text/markdown | Markdown documents. |

text/csv | CSV documents. |

text/html | HTML documents. |

application/x-subrip | SubRip subtitles. |

text/vtt | Web Video Text Tracks (WebVTT) subtitles. |

application/json | JSON documents. |

application/xml | XML documents. |

Supported Outputs

| Mime Type | Description |

|---|---|

text/plain | UTF-8 text documents. |

Supported Compute Types

| Type | Description |

|---|---|

CPU | This middleware only supports CPU compute. |

📖 Examples

- Mistral Summarization Pipeline - Builds a pipeline for text summarization using Mistral models on Amazon Bedrock.