S3 Trigger

The S3 trigger starts processing pipelines based on Amazon S3 object events. Specifically, it monitors the creation, modification, and deletion of objects in the monitored bucket(s).

🪣 Monitoring Buckets

To use this middleware, import it in your CDK stack and specify the bucket(s) you want to monitor.

ℹ️ The example below monitors a single bucket.

    import * as cdk from 'aws-cdk-lib';
    import * as s3 from 'aws-cdk-lib/aws-s3';
    import { Construct } from 'constructs';
    import { S3EventTrigger } from '@project-lakechain/s3-event-trigger';
    import { CacheStorage } from '@project-lakechain/core';

    class Stack extends cdk.Stack {
      constructor(scope: Construct, id: string) {
        // A stack must initialize its base class before using `this`.
        super(scope, id);

        // Sample bucket.
        const bucket = new s3.Bucket(this, 'Bucket', {});

        // The cache storage.
        const cache = new CacheStorage(this, 'Cache');

        // Create the S3 event trigger.
        const trigger = new S3EventTrigger.Builder()
          .withScope(this)
          .withIdentifier('Trigger')
          .withCacheStorage(cache)
          .withBucket(bucket)
          .build();
      }
    }

You can also monitor multiple buckets with the S3 trigger by passing an array of S3 buckets to the .withBuckets method.

    const trigger = new S3EventTrigger.Builder()
      .withScope(this)
      .withIdentifier('Trigger')
      .withCacheStorage(cache)
      .withBuckets([bucket1, bucket2])
      .build();

Filtering

You can also pass finer-grained filtering instructions to the withBucket method to monitor only specific prefixes and/or suffixes.

    const trigger = new S3EventTrigger.Builder()
      .withScope(this)
      .withIdentifier('Trigger')
      .withCacheStorage(cache)
      .withBucket({
        bucket,
        prefix: 'data/',
        suffix: '.csv',
      })
      .build();

🗂️ Metadata

The S3 event trigger middleware can optionally fetch the metadata associated with the S3 object and enrich the created CloudEvent with it.

💁 Metadata retrieval is disabled by default, and can be enabled by using the .withFetchMetadata API.

    const trigger = new S3EventTrigger.Builder()
      .withScope(this)
      .withIdentifier('Trigger')
      .withCacheStorage(cache)
      .withBucket(bucket)
      .withFetchMetadata(true)
      .build();

👨‍💻 Algorithm

The S3 event trigger middleware converts native S3 events into events that follow the CloudEvents specification, and enriches the document description with required metadata, such as the mime-type, the size, and the ETag associated with the document.

Not all of this information can be inferred from the S3 event alone. To compile this metadata efficiently, the middleware uses the following algorithm.

- The size, ETag, and URL of the S3 object are taken from the S3 event and added to the CloudEvent.

- If the object is a directory, it is ignored, as this middleware only processes documents.

- The middleware tries to infer the mime-type of the document from the object extension.

- If the mime-type cannot be inferred from the extension, we try to infer it from the S3 reported content type.

- If the mime-type cannot be inferred from the S3 reported content type, we try to infer it from the first bytes of the document using a chunked request.

- If the mime-type cannot be inferred at all, we set the mime-type to application/octet-stream.

- If S3 object metadata retrieval is enabled, the middleware issues a request to S3 and enriches the CloudEvent with the object metadata.
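The inference order described in the steps above can be sketched as a chain of fallbacks. The following TypeScript is a minimal illustration, not the middleware's actual implementation: the function names (`inferMimeType`, `fromExtension`, `fromContentType`, `fromBytes`), the small extension table, and the handful of byte signatures are all assumptions made for this example. The hypothetical `readHead` callback stands in for the chunked request that reads the first bytes of the document; in practice it would be an asynchronous S3 read, and it is only invoked when the earlier steps fail.

```typescript
// Illustrative sketch only: names and tables below are assumptions.
const FALLBACK_MIME = 'application/octet-stream';

// Tiny sample table; a real implementation would cover far more extensions.
const EXTENSIONS = new Map<string, string>([
  ['csv', 'text/csv'],
  ['json', 'application/json'],
  ['pdf', 'application/pdf'],
  ['png', 'image/png'],
  ['txt', 'text/plain'],
]);

// A few well-known file signatures ("magic numbers").
const SIGNATURES: Array<{ mime: string; bytes: number[] }> = [
  { mime: 'application/pdf', bytes: [0x25, 0x50, 0x44, 0x46] }, // "%PDF"
  { mime: 'image/png', bytes: [0x89, 0x50, 0x4e, 0x47] },
  { mime: 'image/jpeg', bytes: [0xff, 0xd8, 0xff] },
];

// Step 1: look at the extension of the last path segment of the key.
function fromExtension(key: string): string | undefined {
  const name = key.split('/').pop() ?? '';
  const dot = name.lastIndexOf('.');
  if (dot <= 0) return undefined; // no extension, or a dotfile
  return EXTENSIONS.get(name.slice(dot + 1).toLowerCase());
}

// Step 2: use the S3 reported content type, unless it is a generic default.
function fromContentType(contentType?: string): string | undefined {
  const type = contentType?.split(';')[0].trim().toLowerCase();
  if (!type || type === 'binary/octet-stream' || type === FALLBACK_MIME) {
    return undefined;
  }
  return type;
}

// Step 3: compare the first bytes of the document with known signatures.
function fromBytes(head: Uint8Array): string | undefined {
  return SIGNATURES.find(({ bytes }) =>
    bytes.every((b, i) => head[i] === b)
  )?.mime;
}

// Runs the steps in order and falls back to application/octet-stream.
function inferMimeType(
  key: string,
  contentType?: string,
  readHead?: () => Uint8Array
): string {
  return (
    fromExtension(key) ??
    fromContentType(contentType) ??
    (readHead ? fromBytes(readHead()) : undefined) ??
    FALLBACK_MIME
  );
}
```

With this sketch, `inferMimeType('data/report.csv')` resolves from the extension alone, while an extensionless key uploaded with the S3 default `binary/octet-stream` content type falls through to the byte signatures, and finally to `application/octet-stream` when nothing matches.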

📤 Events

This middleware emits CloudEvents whenever an object is created, modified, or deleted in the monitored bucket(s). Below are examples of the events emitted by the S3 trigger middleware upon the creation (or modification) and the deletion of an object.


| Event Type | Example |
|---|---|
| Document Creation or Update | |
| Document Deletion | |

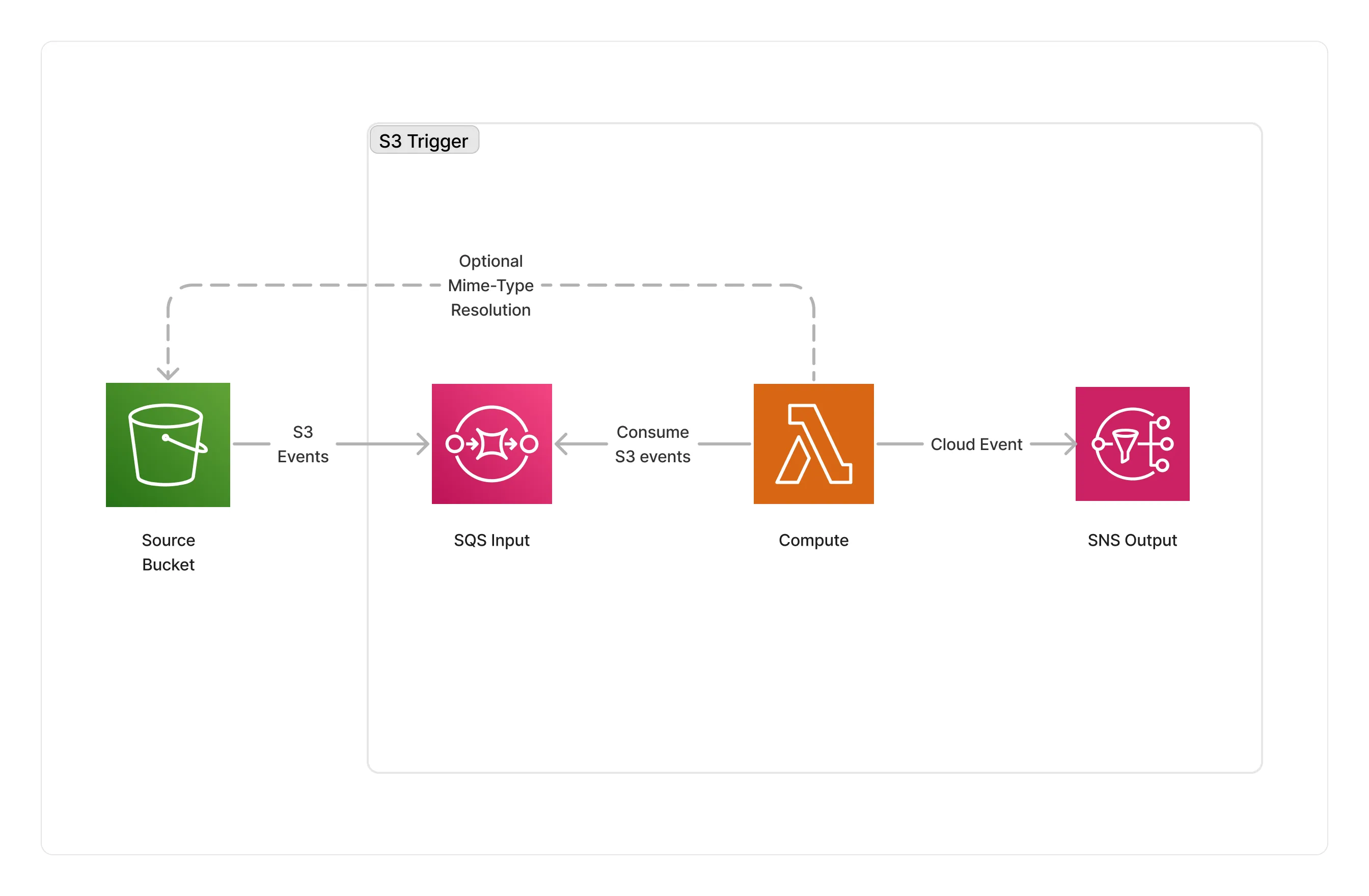

🏗️ Architecture

The S3 trigger receives S3 events from subscribed buckets on its SQS input queue. A Lambda function consumes these events and translates each of them into a CloudEvent. The Lambda function also identifies the mime-type of a document based on its extension, the S3 reported content type, or the content of the document itself.
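To make the translation step more concrete, here is a hedged sketch of how a Lambda function might turn the S3 notification carried in an SQS message body into document events. The `S3Record` interface covers only a subset of the standard S3 event notification fields. The `DocumentEvent` shape, the `document-created` and `document-deleted` type names, and the `translate` and `toDocumentEvent` helpers are hypothetical and simplified, so the real Lakechain event schema may differ. Mime-type detection is left out.

```typescript
// Subset of the S3 event notification record fields used below.
interface S3Record {
  eventName: string; // e.g. "ObjectCreated:Put" or "ObjectRemoved:Delete"
  eventTime: string;
  s3: {
    bucket: { name: string };
    object: { key: string; size?: number; eTag?: string };
  };
}

// Hypothetical, simplified event shape (assumption for illustration).
interface DocumentEvent {
  specversion: '1.0';
  type: 'document-created' | 'document-deleted';
  time: string;
  data: { url: string; size?: number; etag?: string };
}

// Converts one S3 record, or returns undefined when it should be ignored.
function toDocumentEvent(record: S3Record): DocumentEvent | undefined {
  // S3 URL-encodes object keys in notifications, with spaces sent as "+".
  const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '));

  // Keys ending with "/" denote directories, which are not documents.
  if (key.endsWith('/')) return undefined;

  const removed = record.eventName.startsWith('ObjectRemoved:');
  const created = record.eventName.startsWith('ObjectCreated:');
  if (!removed && !created) return undefined;

  return {
    specversion: '1.0',
    type: removed ? 'document-deleted' : 'document-created',
    time: record.eventTime,
    data: {
      url: `s3://${record.s3.bucket.name}/${key}`,
      size: record.s3.object.size,
      etag: record.s3.object.eTag,
    },
  };
}

// Parses the body of one SQS message carrying an S3 notification.
function translate(sqsBody: string): DocumentEvent[] {
  // Messages without a Records array (such as S3 test events) yield nothing.
  const records: S3Record[] = JSON.parse(sqsBody).Records ?? [];
  return records
    .map((record) => toDocumentEvent(record))
    .filter((event): event is DocumentEvent => event !== undefined);
}
```

A handler built this way would call `translate` once per SQS message and forward the resulting events to the next middlewares. Creations and modifications both arrive as `ObjectCreated:*` notifications, which is why the sketch maps them to a single event type.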

🏷️ Properties

Supported Inputs

This middleware does not accept any inputs from other middlewares.

Supported Outputs

| Mime Type | Description |
|---|---|
| `*/*` | The S3 event trigger middleware can produce any type of document. |

Supported Compute Types

| Type | Description |
|---|---|
| CPU | This middleware is based on a Lambda architecture. |

📖 Examples

- Face Detection Pipeline - An example showcasing how to build face detection pipelines using Project Lakechain.

- NLP Pipeline - Builds a pipeline for extracting metadata from text-oriented documents.

- E-mail NLP Pipeline - An example showcasing how to analyze e-mails using E-mail parsing and Amazon Comprehend.