FFMPEG

The FFMPEG processor brings the power of the FFMPEG Project to Lakechain pipelines, allowing developers to process audio and video documents at scale. Developers express their processing logic as a Funclet in their CDK code, and that funclet is executed in the cloud at runtime.

The FFMPEG processor uses the fluent-ffmpeg library to describe the FFMPEG processing logic in a declarative syntax. This approach lets you prototype the processing logic in your local environment, and then replicate it within the funclet to run it as part of a pipeline.

🎬 Using FFMPEG

To use this middleware, you import it in your CDK stack and instantiate it as part of a pipeline.

💁 Below is a simple example of using the FFMPEG processor to extract the audio track of an input video into a new document.

    import * as cdk from 'aws-cdk-lib';
    import { Construct } from 'constructs';
    import { FfmpegProcessor, CloudEvent, FfmpegUtils, Ffmpeg } from '@project-lakechain/ffmpeg-processor';

    class Stack extends cdk.Stack {
      constructor(scope: Construct, id: string) {
        super(scope, id);

        // `cache`, `vpc` and `source` are assumed to be defined elsewhere in the stack.
        const ffmpeg = new FfmpegProcessor.Builder()
          .withScope(this)
          .withIdentifier('FfmpegProcessor')
          .withCacheStorage(cache)
          .withVpc(vpc)
          .withSource(source)
          // 👇 FFMPEG funclet.
          .withIntent(async (events: CloudEvent[], ffmpeg: Ffmpeg, utils: FfmpegUtils) => {
            // Keep only the MP4 videos among the input documents.
            const videos = events.filter(
              (event) => event.data().document().mimeType() === 'video/mp4'
            );
            // Drop the video stream and save the audio track.
            return (ffmpeg()
              .input(utils.file(videos[0]))
              .noVideo()
              .save('output.mp3')
            );
          })
          .build();
      }
    }

✍️ Funclet Signature

Funclet expressions give you the power of a full programming language to express your processing logic. They are asynchronous and can be defined as TypeScript named functions, anonymous functions, or arrow functions.
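As a minimal sketch of these three forms, the snippet below defines the same funclet as a named function, an anonymous function expression, and an arrow function. The `SketchFunclet` and `SketchCommand` types are simplified placeholders introduced here for illustration, not the types exported by the library.

```typescript
// Simplified placeholder types (assumptions); the real CloudEvent, Ffmpeg and
// FfmpegUtils types come from '@project-lakechain/ffmpeg-processor'.
type SketchCommand = { name: string };
type SketchFunclet = (
  events: string[],
  ffmpeg: SketchCommand,
  utils: object
) => Promise<SketchCommand>;

// 1. Named async function.
async function namedFunclet(
  _events: string[],
  ffmpeg: SketchCommand,
  _utils: object
): Promise<SketchCommand> {
  return ffmpeg;
}

// 2. Anonymous async function expression.
const anonymousFunclet: SketchFunclet = async function (_events, ffmpeg, _utils) {
  return ffmpeg;
};

// 3. Async arrow function.
const arrowFunclet: SketchFunclet = async (_events, ffmpeg, _utils) => ffmpeg;

// All three forms share the same shape and can be used interchangeably.
const sketchCommand: SketchCommand = { name: 'sketch' };
const sketchFunclets: SketchFunclet[] = [namedFunclet, anonymousFunclet, arrowFunclet];
```

Whichever form you choose, the function stays asynchronous and resolves to the object that the processor expects back.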

The funclet signature for the FFMPEG intent you want to execute is as follows.

    type FfmpegFunclet = (events: CloudEvent[], ffmpeg: Ffmpeg, utils: FfmpegUtils) => Promise<Ffmpeg>;

Inputs

The FFMPEG funclet takes three arguments.

| Type | Description |
|---|---|
| CloudEvent[] | An array of CloudEvent objects, each describing an input document. The FFMPEG middleware can take one or multiple documents as an input, which makes it particularly powerful for processing multiple audio and video documents together. |
| Ffmpeg | The Ffmpeg object implements the same interface as the fluent-ffmpeg library, so you can develop your logic locally in a bespoke application and then replicate it within the funclet. |
| FfmpegUtils | The FfmpegUtils object provides utility functions to interact with the documents and the file system. |
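Because a funclet can receive several documents at once, a common first step is to pick the ones it cares about. The sketch below generalizes the MIME type filter from the first example on this page. `DocumentLike`, `EventLike`, `selectByMimePrefix` and `fakeEvent` are hypothetical names introduced for illustration; they model only the `data().document().mimeType()` accessors and are not part of the library.

```typescript
// Structural stand-ins (assumptions) for the accessors used on CloudEvent.
interface DocumentLike { mimeType(): string; }
interface EventLike { data(): { document(): DocumentLike }; }

// Returns the events whose document MIME type starts with the given prefix,
// for example 'video/' or 'audio/'.
function selectByMimePrefix<T extends EventLike>(inputs: T[], prefix: string): T[] {
  return inputs.filter((event) =>
    event.data().document().mimeType().startsWith(prefix)
  );
}

// Hypothetical helper that builds a fake event for local experiments.
function fakeEvent(mimeType: string): EventLike {
  return { data: () => ({ document: () => ({ mimeType: () => mimeType }) }) };
}

const mixedInputs = [
  fakeEvent('video/mp4'),
  fakeEvent('audio/mpeg'),
  fakeEvent('video/webm'),
];
const videoInputs = selectByMimePrefix(mixedInputs, 'video/');
const audioInputs = selectByMimePrefix(mixedInputs, 'audio/');
```

Inside a real funclet, the same filter could be applied to the `events` argument before handing the selected documents to `utils.file`.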

Outputs

A processing funclet must return a Promise that resolves to the fluent-ffmpeg instance created in the funclet. This allows the processing logic to be asynchronous, and ensures that the instance of the FFMPEG library is returned to the FFMPEG processor for collecting the produced files.
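One way to check this contract locally, without running FFMPEG, is to call the funclet with a small recording stub. This is only a sketch under assumptions: `RecordingCommand`, `StubEvent`, `StubUtils` and `extractAudio` are hypothetical names, the stub mimics just the `input()`, `noVideo()` and `save()` methods used in the first example, and `utils.file` is assumed to return a file path.

```typescript
// Hypothetical recording stub that mimics only the three fluent-ffmpeg
// methods used in this page's example; it runs no actual processing.
class RecordingCommand {
  readonly calls: string[] = [];
  input(source: string): this { this.calls.push(`input:${source}`); return this; }
  noVideo(): this { this.calls.push('noVideo'); return this; }
  save(target: string): this { this.calls.push(`save:${target}`); return this; }
}

// Stand-ins (assumptions) for the funclet arguments.
interface StubEvent { path: string; mimeType: string; }
interface StubUtils { file(event: StubEvent): string; }
type StubFfmpeg = () => RecordingCommand;

// Named async funclet: extracts the audio track of the first MP4 video and
// returns the command instance, as the processor expects.
async function extractAudio(
  events: StubEvent[],
  ffmpeg: StubFfmpeg,
  utils: StubUtils
): Promise<RecordingCommand> {
  const videos = events.filter((event) => event.mimeType === 'video/mp4');
  if (videos.length === 0) {
    throw new Error('No video/mp4 document found in the input events');
  }
  return ffmpeg().input(utils.file(videos[0])).noVideo().save('output.mp3');
}

const stubEvents: StubEvent[] = [
  { path: '/tmp/notes.txt', mimeType: 'text/plain' },
  { path: '/tmp/talk.mp4', mimeType: 'video/mp4' },
];
const stubUtils: StubUtils = { file: (event) => event.path };
const recorded = extractAudio(stubEvents, () => new RecordingCommand(), stubUtils);
```

The promise resolves to the same command instance that the funclet built, which is the object the processor needs in order to collect the produced files.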

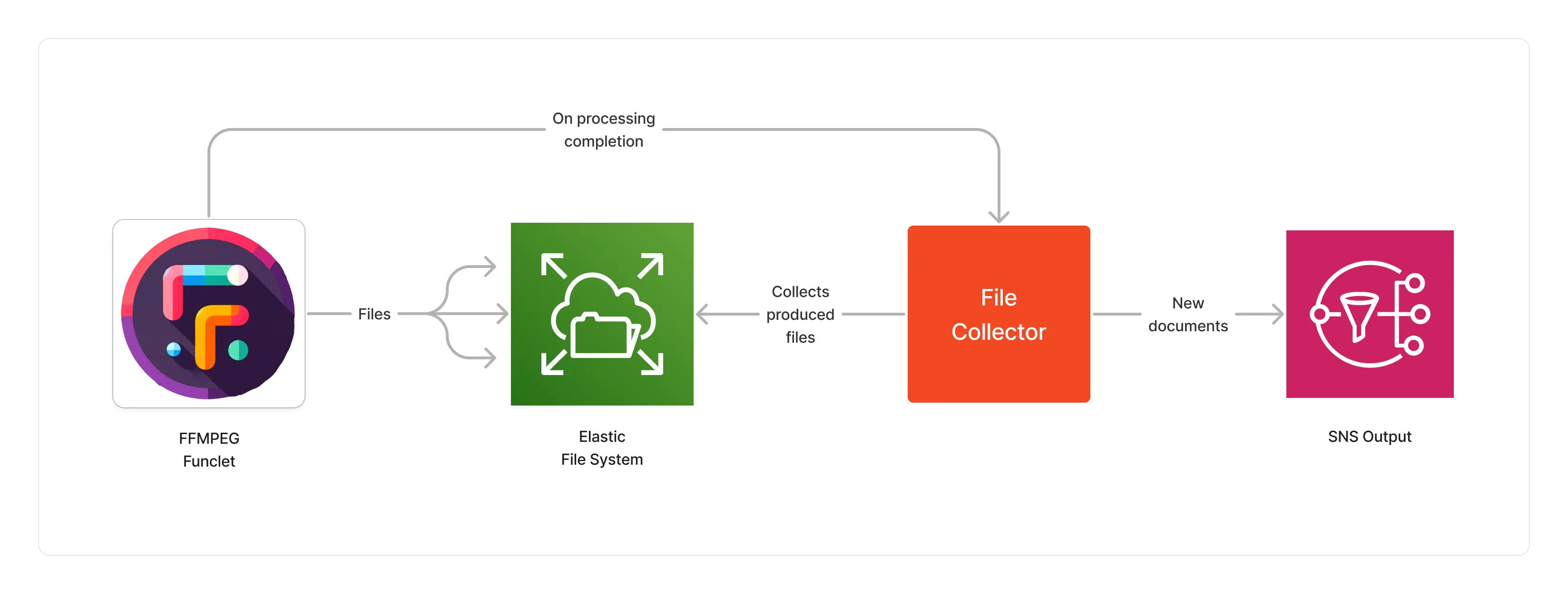

Each file created by FFMPEG within the funclet is written to a directory backed by an Amazon Elastic File System (EFS) volume mounted in the running container. At the end of the processing, the FFMPEG processor automatically collects all files, packages them into new documents, and passes them to the next middlewares in the pipeline.

💁 All files are also automatically removed from the EFS at the end of a funclet execution.

↔️ Auto-Scaling

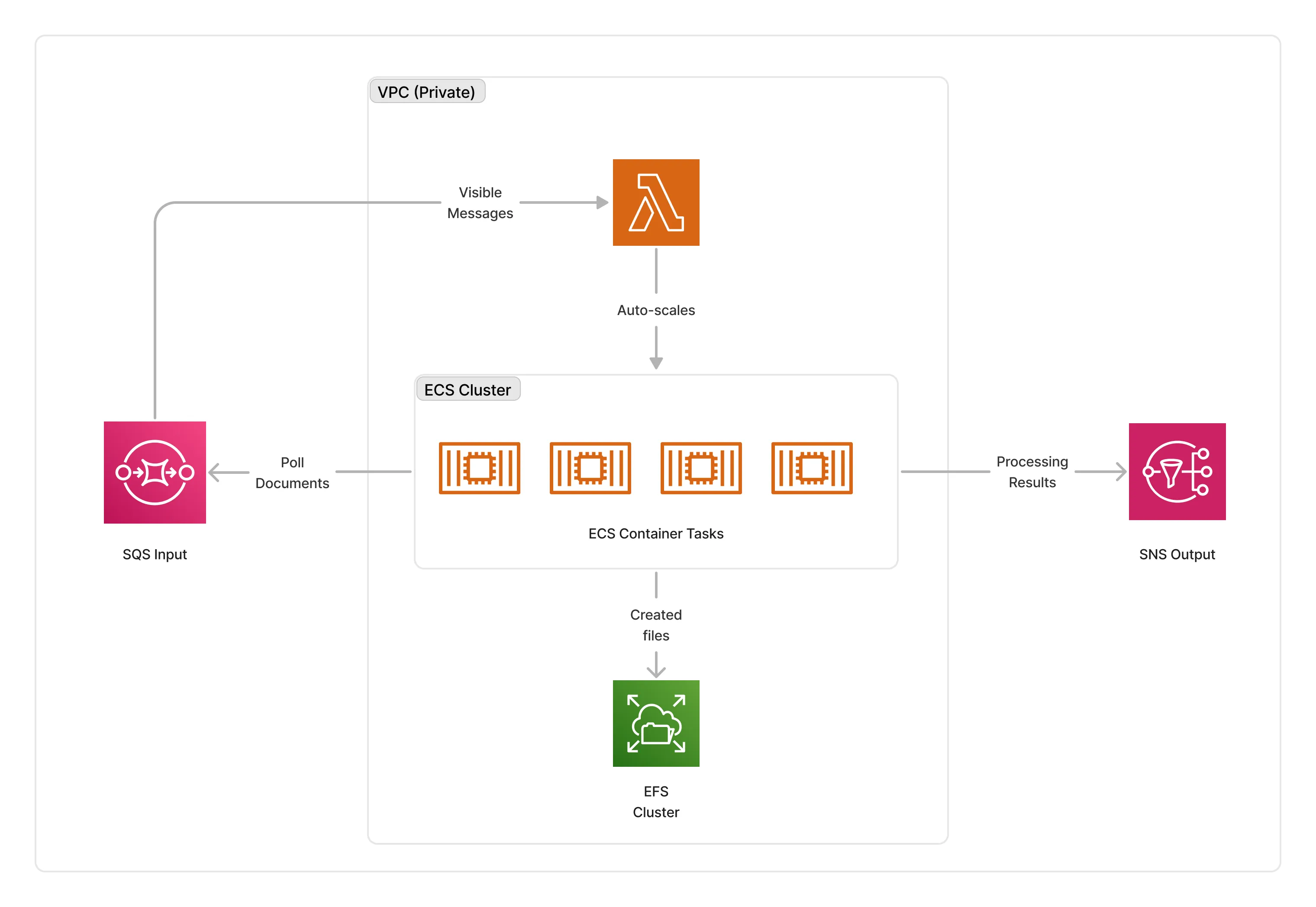

The cluster of containers deployed by this middleware will auto-scale based on the number of documents that need to be processed. The cluster scales up to a maximum of 5 instances by default, and scales down to zero when there are no documents to process.

ℹ️ You can configure the maximum number of instances that the cluster can auto-scale to by using the withMaxInstances method.

    import { FfmpegProcessor } from '@project-lakechain/ffmpeg-processor';

    const ffmpeg = new FfmpegProcessor.Builder()
      .withScope(this)
      .withIdentifier('FfmpegProcessor')
      .withCacheStorage(cache)
      .withVpc(vpc)
      .withSource(source)
      .withIntent(intent)
      .withMaxInstances(10) // 👈 Maximum amount of instances
      .build();

🌉 Infrastructure

You can customize the underlying infrastructure used by the FFMPEG processor by defining a custom infrastructure definition. You can opt to do so if you’d like to tweak the performance of the processor or the cost of running it.

💁 By default, the FFMPEG processor executes Docker containers on Amazon ECS using c6a.xlarge instances.

    import * as ec2 from 'aws-cdk-lib/aws-ec2';
    import { FfmpegProcessor, InfrastructureDefinition } from '@project-lakechain/ffmpeg-processor';

    const ffmpeg = new FfmpegProcessor.Builder()
      .withScope(this)
      .withIdentifier('FfmpegProcessor')
      .withCacheStorage(cache)
      .withVpc(vpc)
      .withSource(source)
      .withIntent(intent)
      .withInfrastructure(new InfrastructureDefinition.Builder()
        .withMaxMemory(15 * 1024)
        .withGpus(0)
        .withInstanceType(ec2.InstanceType.of(
          ec2.InstanceClass.C6A,
          ec2.InstanceSize.XLARGE2
        ))
        .build())
      .build();

🏗️ Architecture

The FFMPEG processor runs on an ECS cluster of auto-scaled instances, and packages FFMPEG and the code required to integrate it with Lakechain into a Docker container.

The files created within the user-provided funclet are stored on an Amazon Elastic File System (EFS) volume mounted in the container.

🏷️ Properties

Supported Inputs

| Mime Type | Description |
|---|---|
| video/* | The FFMPEG processor supports all video types supported by FFMPEG. |
| audio/* | The FFMPEG processor supports all audio types supported by FFMPEG. |
| application/cloudevents+json | The FFMPEG processor supports composite events. |

Supported Outputs

| Mime Type | Description |
|---|---|
| video/* | The FFMPEG processor can produce any video type supported by FFMPEG. |
| audio/* | The FFMPEG processor can produce any audio type supported by FFMPEG. |

Supported Compute Types

| Type | Description |
|---|---|
| CPU | This middleware only supports CPU compute. |

📖 Examples

- Building a Generative Podcast - Builds a pipeline for creating a generative weekly AWS news podcast.

- Building a Video Chaptering Service - Builds a pipeline for automatic video chaptering generation.